Come comment on this article: Arlo announces an upgraded 4K wireless security camera

Arlo announces an upgraded 4K wireless security camera appeared first on http://www.talkandroid.com

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory have created a system that can reproduce paintings from a single photo, allowing museums and art lovers to snap their favorite pictures and print new copies complete with paint textures.

Called RePaint, the project uses machine learning to recreate the exact colors of each painting and then prints it using a high-end 3D printer that can output thousands of colors using half-toning.

The researchers, however, found a better way to capture a fuller spectrum of Degas and Dali. They used a special technique they developed called “color-contoning”, which involves using a 3-D printer and 10 different transparent inks stacked in very thin layers, much like the wafers and chocolate in a Kit-Kat bar. They combined their method with a decades-old technique called “halftoning”, where an image is created by tons of little ink dots, rather than continuous tones. Combining these, the team says, better captured the nuances of the colors.
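To make the halftoning idea concrete, here is a minimal sketch of ordered dithering, one classic way of turning continuous tones into a pattern of dots. It is only an illustration and not the RePaint team's actual pipeline; the 4x4 threshold matrix and the function names are assumptions chosen for the example.

```python
# Ordered dithering: a simple form of halftoning.
# Illustrative only; this is not the method used by RePaint.

# Standard 4x4 Bayer threshold matrix (values 0..15).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def halftone(gray):
    """Turn a grid of gray levels in [0.0, 1.0] into a grid of 0/1 dots.

    1 means "print an ink dot" and 0 means "leave blank". Darker input
    (values nearer 0.0) produces more dots.
    """
    dots = []
    for y, row in enumerate(gray):
        dot_row = []
        for x, value in enumerate(row):
            # Each pixel is compared against a position-dependent threshold.
            threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16.0
            darkness = 1.0 - value
            dot_row.append(1 if darkness > threshold else 0)
        dots.append(dot_row)
    return dots


def dot_coverage(dots):
    """Fraction of cells that received an ink dot."""
    total = sum(len(row) for row in dots)
    return sum(sum(row) for row in dots) / total


# A flat mid-gray patch ends up with dots on half of its cells.
mid_gray = [[0.5] * 4 for _ in range(4)]
print(dot_coverage(halftone(mid_gray)))
```

Seen from a distance, the eye averages the dots back into a tone, which is why a printer with a limited set of inks can still suggest smooth gradients.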

“If you just reproduce the color of a painting as it looks in the gallery, it might look different in your home,” said researcher Changil Kim. “Our system works under any lighting condition, which shows a far greater color reproduction capability than almost any other previous work.”

Sadly the prints are only about as big as a business card. The system also can’t yet support matte finishes and detailed surface textures but the team is working on improving the algorithms and the 3D printing tech so you’ll finally be able to recreate that picture of dogs playing poker in 3D plastic.

Before we send any planet-trotting robot to explore the landscape of Mars or Venus, we need to test it here on Earth. Two such robotic platforms being developed for future missions are undergoing testing at European Space Agency facilities: one that rolls, and one that hops.

The rolling one is actually on the books to head to the Red Planet as part of the ESA’s ExoMars 2020 program. It has just wrapped a week of testing in the Spanish desert, one of many Mars analogs that space programs use. It looks nice. The gravity’s a little different, of course, and there’s a bit more atmosphere, but it’s close enough to test a few things.

The team controlling Charlie, which is what they named the prototype, was doing so from hundreds of miles away, in the U.K. — not quite an interplanetary distance, but they did of course think to simulate the delay operators would encounter if the rover were actually on Mars. It would also have a ton more instruments on board.
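For a rough sense of the delay being simulated, the one-way signal time is just distance divided by the speed of light. The sketch below is back-of-the-envelope arithmetic, not the ESA team's simulation; the two Earth-Mars distances are approximate, assumed values that change with orbital geometry.

```python
SPEED_OF_LIGHT_M_S = 299_792_458

# Approximate Earth-Mars distances in kilometers (assumed round figures).
CLOSEST_KM = 54.6e6
FARTHEST_KM = 401e6


def one_way_delay_s(distance_km):
    """Seconds for a radio signal to cover the given distance."""
    return distance_km * 1000.0 / SPEED_OF_LIGHT_M_S


def round_trip_delay_min(distance_km):
    """Minutes between sending a command and seeing its result."""
    return 2.0 * one_way_delay_s(distance_km) / 60.0


for label, km in (("closest", CLOSEST_KM), ("farthest", FARTHEST_KM)):
    print(label, round(one_way_delay_s(km)), "s one way,",
          round(round_trip_delay_min(km), 1), "min round trip")
```

Under these assumptions the one-way delay runs from about three minutes to a bit over 22 minutes, which is why rovers cannot simply be joysticked in real time.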

Exploration and navigation were still done entirely using information collected by the rover via radar and cameras, and the rover’s drill was also put to work. It rained one day, which is extraordinarily unlikely to happen on Mars, but the operators presumably pretended it was a dust storm and rolled with it.

Another Earth-analog test is scheduled for February in Chile’s Atacama desert. You can learn more about the ExoMars rover and the Mars 2020 mission here.

The other robot that the ESA publicized this week isn’t theirs but was developed by ETH Zurich: the SpaceBok — you know, like springbok. The researchers there think that hopping around like that well-known ungulate could be a good way to get around on other planets.

It’s nice to roll around on stable wheels, sure, but it’s no use when you want to get to the far side of some boulder or descend into a ravine to check out an interesting mineral deposit. SpaceBok is meant to be a highly stable jumping machine that can traverse rough terrain or walk with a normal quadrupedal gait as needed (well, normal for robots).

“This is not particularly useful on Earth,” admits SpaceBok team member Elias Hampp, but “it could reach a height of four meters on the Moon. This would allow for a fast and efficient way of moving forward.”
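The four-meter figure can be sanity-checked with basic projectile physics: a jump with takeoff speed v reaches a height of v²/(2g). The sketch below assumes the robot leaves the ground at the same speed on both bodies and ignores air resistance; those assumptions, and the rounded gravity values, are mine rather than the SpaceBok team's.

```python
import math

G_EARTH = 9.81  # m/s^2, surface gravity (rounded)
G_MOON = 1.62   # m/s^2, surface gravity (rounded)


def takeoff_speed(height_m, gravity):
    """Vertical takeoff speed needed to reach a given apex height."""
    return math.sqrt(2.0 * gravity * height_m)


def jump_height(speed_m_s, gravity):
    """Apex height of a vertical jump with the given takeoff speed."""
    return speed_m_s ** 2 / (2.0 * gravity)


speed = takeoff_speed(4.0, G_MOON)          # about 3.6 m/s
earth_height = jump_height(speed, G_EARTH)  # about 0.66 m
print(round(speed, 2), round(earth_height, 2))
```

The same push that clears four meters on the Moon would lift the robot only about two-thirds of a meter on Earth, which fits Hampp's remark that hopping is not especially useful here.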

It was doing some testing at the ESA’s “Mars Yard sandbox,” a little pen filled with Mars-like soil and rocks. The team is looking into improving autonomy with better vision — the better it can see where it lands, the better SpaceBok can stick that landing.

Interplanetary missions are very much in vogue now, and we may soon even see some private trips to the Moon and Mars. So even if NASA or the ESA doesn’t decide to take SpaceBok (or some similarly creative robot) out into the solar system, perhaps a generous sponsor will.

Jeffrey Martin takes massive panoramic photographs of the world, and his photos let you go from the panoramic to the intimate in a single mouse swipe. Now he’s truly outdone himself with a 900,000-pixel-wide photo of Prague’s Old Town that took six months to build.

The photo, viewable here, has a total spherical resolution of 405 gigapixels, and it is amazing. Martin used a 600mm lens and 50MP DSLR to take photos of nearly everything in the Old Town. You can see the Cathedral, Castle Hill, and even spot street signs, building signs, and pigeons. It’s a fascinating view of a beautiful city.

Martin said it took him over six months to post-process the picture and it required thousands of photos and tweaks. He said the files are six times bigger than anything Photoshop can manage so he found himself working with delicate fixes as he stitched this amazing photo together.
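The two headline numbers are consistent with each other if the panorama is stored as a full 360-by-180-degree equirectangular image, which is twice as wide as it is tall. That projection is an assumption on my part, as is the idealized frame count below, which ignores the overlap that stitching needs.

```python
width_px = 900_000
height_px = width_px // 2            # 2:1 aspect for a full sphere (assumed)
total_px = width_px * height_px

gigapixels = total_px / 1e9          # 405.0

# Lower bound on frames from a 50-megapixel sensor, with zero overlap.
sensor_px = 50_000_000
min_frames = total_px // sensor_px   # 8100

print(gigapixels, min_frames)
```

Even this no-overlap floor lands in the thousands of frames, in line with Martin's description of the job.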

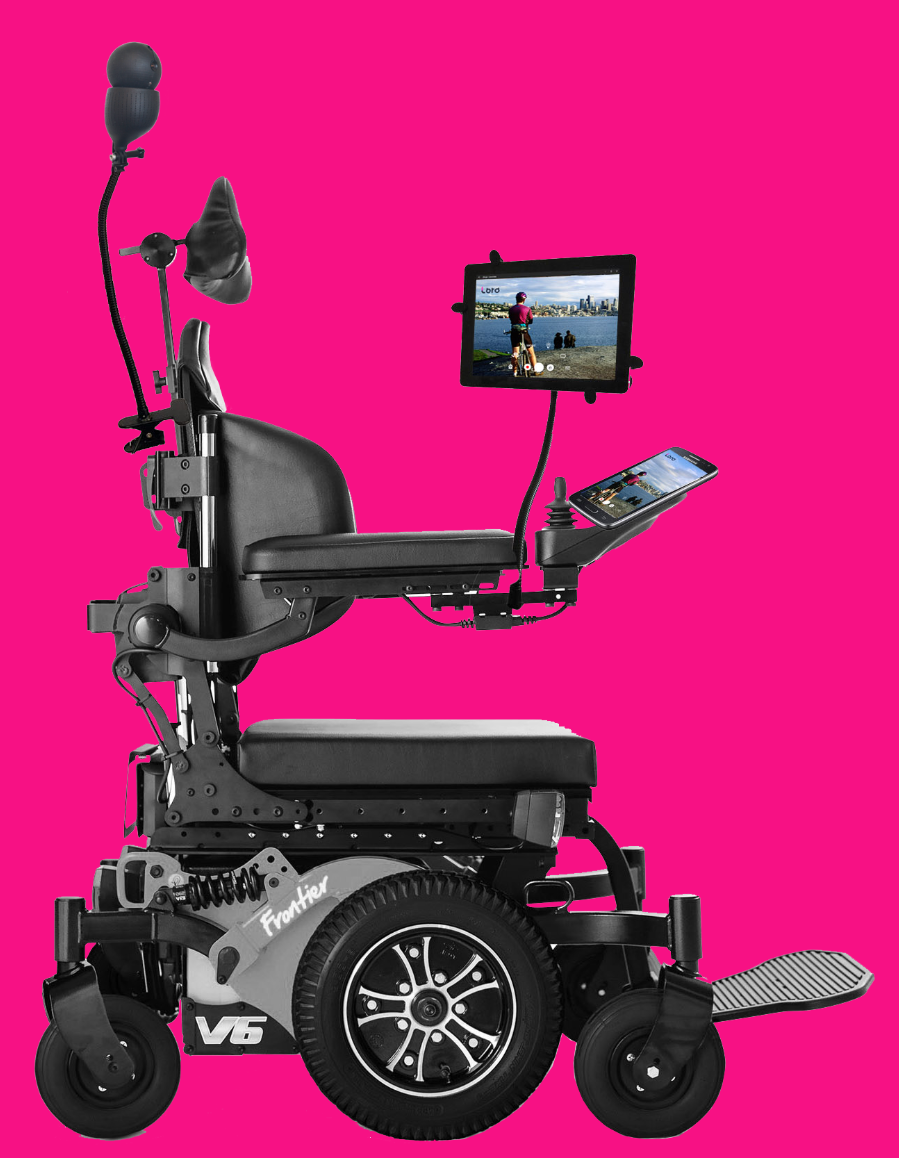

A person with physical disabilities can’t interact with the world the same way as the able, but there’s no reason we can’t use tech to close that gap. Loro is a device that mounts to a wheelchair and offers its occupant the ability to see and interact with the people and things around them in powerful ways.

Loro’s camera and app work together to let the user see farther, read or translate writing, identify people, gesture with a laser pointer and more. They demonstrated their tech onstage today during Startup Battlefield at TechCrunch Disrupt Berlin.

Invented by a team of mostly students who gathered at Harvard’s Innovation Lab, Loro began as a simple camera for disabled people to more easily view their surroundings.

“We started this project for our friend Steve,” said Loro co-founder and creative director, Johae Song. A designer like her and others in their friend group, he was diagnosed with Amyotrophic Lateral Sclerosis, or ALS, a degenerative neural disease that paralyzes the muscles of the afflicted. “So we decided to come up with ideas of how to help people with mobility challenges.”

“We started with just the idea of a camera attached to the wheelchair, to give people a panoramic view so they can navigate easily,” explained co-founder David Hojah. “We developed from that idea after talking with mentors and experts; we did a lot of iterations, and came up with the idea to be smarter, and now it’s this platform that can do all these things.”

It’s not simple to design responsibly for a population like ALS sufferers and others with motor problems. The problems they may have in everyday life aren’t necessarily what one would think, nor are the solutions always obvious. So the Loro team determined to consult many sources and expend a great deal of time in simple observation.

“Very basic observation — just sit and watch,” Hojah said. “From that you can get ideas of what people need without even asking them specific questions.”

Others would voice specific concerns without suggesting solutions. One such concern led to a flashlight the user can direct through the camera interface.

“People didn’t say, ‘I want a flashlight,’ they said ‘I can’t get around in the dark.’ So we brainstormed and came up with the flashlight,” he said. An obvious solution in some ways, but only through observation and understanding can it be implemented well.

The focus is always on communication and independence, Song said, and users are the ones who determine what gets included.

“We brainstorm together and then go out and user test. We realize some features work, others don’t. We try to just let them play with it and see what features people use the most.”

There are assistive devices for motor-impaired people out there already, Song and Hojah acknowledged, but they’re generally expensive, unwieldy and poorly designed. Hojah’s background is in medical device design, so he knows of what he speaks.

Consequently, Loro has been designed to be as accessible as possible, with a tablet interface that can be navigated using gaze tracking (via a Tobii camera setup) or other inputs like joysticks and sip-and-puff tubes.

The camera can be directed to, for example, look behind the wheelchair so the user can safely back up. Or it can zoom in on a menu that’s difficult to see from the user’s perspective and read the items off. The laser pointer allows a user with no ability to point or gesture to signal in ways we take for granted, such as choosing a pastry from a case. Text to speech is built right in, so users don’t have to use a separate app to speak out loud.

The camera also tracks faces and can recognize them from a personal (though for now, cloud-hosted) database for people who need help tracking those with whom they interact. The best of us can lose a name or fail to place a face — honestly, I wouldn’t mind having a Loro on my shoulder during some of our events.

Right now the team is focused on finalizing the hardware; the app and capabilities are mostly finalized but the enclosure and so on need to be made production-ready. The company itself is very early-stage — they just incorporated a few months ago and worked with $100,000 in pre-seed funding to create the prototype. Next up is doing a seed round to get ready to manufacture.

“The whole team, we’re really passionate about empowering these people to be really independent, not just waiting for help from others,” Hojah said. Their driving force, he made clear, is compassion.

For $799 you can start mining cryptocurrencies in your home, a feat that previously either required a massive box costing thousands of dollars or, if you didn’t actually want to make any money, a Raspberry Pi. The Coinmine One, created by Farbood Nivi, soundly hits the sweet spot between actual mining and experimentation.

The box is about as big as a gaming console and runs a custom OS called MineOS. The system lets you pick a cryptocurrency to mine – Monero, for example, as the system isn’t very good with mature, ASIC-dependent currencies like BTC – and then runs it on the built-in CPU and GPU. The machine contains an Intel Celeron J Series processor and an AMD Radeon RX 570 graphics card for mining. It also has a 1 TB drive to hold the massive blockchains required to manage these currencies.

The box mines Ethereum at 29 MH/s and Monero at 800 H/s – acceptable numbers for an entry-level miner like this one. You can upgrade it to support new coins, allowing you to get in on the ground floor of whatever weird thing crypto folks create tomorrow.

I saw the Coinmine in Brooklyn and it looks nice. It’s a cleverly-made piece of consumer tech that brings the mystery of crypto mining to the average user. Nivi doesn’t see this as a profit-making machine. Instead, it is a tool to help crypto experimenters try to mine new currencies and run a full node on the network. That doesn’t mean you can’t get Lambo with this thing, but expect Lambo to take a long, long time.

The device ships next month to hungry miners world-wide. It’s a fascinating move for the average user to experience the thrills and spills of the recent crypto bust.

Amazon today announced AWS DeepRacer, a fully autonomous 1/18th-scale race car that aims to help developers learn machine learning. Priced at $399 but currently offered for $249, the race car lets developers get hands-on — literally — with a machine learning technique called reinforcement learning (RL).

RL takes a different approach to training models than other machine learning techniques, Amazon explained.

It’s a type of machine learning that works when an “agent” is allowed to act on a trial-and-error basis within an interactive environment. It does so using feedback from those actions to learn over time in order to reach a predetermined goal or to maximize some type of score or reward.

This makes it different from other machine learning techniques — like Supervised Learning, for example — as it doesn’t require any labeled training data to get started, and it can make short-term decisions while optimizing for a long-term goal.
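As a minimal sketch of that trial-and-error loop, here is tabular Q-learning on a toy five-cell track. It has nothing to do with the DeepRacer software itself; the environment, reward, and hyperparameters are all made up for illustration. The agent starts at cell 0, earns a reward only on reaching cell 4, and has no labeled examples to learn from.

```python
import random

N_STATES = 5        # cells 0..4 on a straight track; cell 4 is the finish
ACTIONS = (-1, +1)  # action 0 = step back, action 1 = step forward


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on episode length
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                best = max(q[state])       # exploit, breaking ties randomly
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # action index chosen in each non-finish cell
```

The discount factor is what lets short-term choices serve a long-term goal: a step forward from cell 0 pays nothing immediately, but its value estimate grows as reward information propagates back from the finish.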

The new race car lets developers experiment with RL by learning through autonomous driving.

We are pleased and excited to announce AWS DeepRacer and the AWS DeepRacer League. New reinforcement learning powered AWS service and racing league ML powered cars. #reInvent pic.twitter.com/9Qmmqj4Ebw

— AWS re:Invent (@AWSreInvent) November 28, 2018

Developers first get started using a virtual car and tracks in a cloud-based 3D racing simulator, powered by AWS RoboMaker. Here, they can train an autonomous driving model against a collection of predefined race tracks included with the simulator, then evaluate it virtually or download it to the real-world AWS DeepRacer car.

They can also opt to participate in the first AWS DeepRacer League at the re:Invent conference, where the car was announced. This event will take place over the next 24 hours in the AWS DeepRacer workshops and at the MGM Speedway and will involve using Amazon SageMaker, AWS RoboMaker and other AWS services.

There are six main tracks, each with a pit area, a hacker garage and two extra tracks developers can use for training and experimentation. There will also be a DJ.

The league will continue after the event, as well, with a series of live racing events starting in 2019 at AWS Global Summits worldwide. Virtual tournaments will also be hosted throughout the year, Amazon said, with the goal of winning the AWS DeepRacer 2019 Championship Cup at re:Invent 2019.

As for the car’s hardware itself, it’s a 1/18th-scale, radio-controlled, four-wheel drive vehicle powered by an Intel Atom processor. The processor runs Ubuntu 16.04 LTS, ROS (Robot Operating System) and the Intel OpenVINO computer vision toolkit.

The car also includes a 4 megapixel camera with 1080p resolution, 802.11ac Wi-Fi, multiple USB ports and battery power that will last for about two hours.

It’s available for sale on Amazon here.

If you’ve ever wanted to add creepy, 3D-printed hands to your creepy robot dog, YouBionic has you covered. This odd company is offering an entirely 3D-printed arm solution for the Boston Dynamics SpotMini, the doglike robot that already has an arm of its own. YouBionic is selling the $179 3D models for the product that you can print and assemble yourself.

This solution is very skimpy on the details but as you can see it essentially turns the SpotMini into a robotic centaur, regal and majestic as those mythical creatures are. There isn’t much description of how the system will work in practice – the STLs include the structural parts but not the electronics. That said, it’s a fascinating concept and could mean the beginning of a truly component-based robotics solution.

In September, Amazon launched its Alexa Gadgets Toolkit into beta, allowing hardware makers to build accessories that pair with Amazon Echo over Bluetooth. Today, one of the most memorable (and quite ridiculous) examples of that technology is going live. Yes, I’m talking about the Alexa-enabled Big Mouth Billy Bass, of course. You know, the talking fish that hangs on the wall, and has now been updated to respond to Alexa voice commands?

Amazon first showed off this technology over a year ago at an event at its Seattle headquarters, then this fall confirmed the talking fish would be among the debut products to use its new Alexa Gadgets Toolkit.

The toolkit lets developers build Alexa-connected devices that use things like lights, sound chips or even motors, in order to work with Alexa interfaces like notifications, timers, reminders, text-to-speech, and wake word detection.

The talking fish can actually do much of that.

According to the company’s announcement, Big Mouth Billy Bass can react to timers, notifications, and alarms, and can play Amazon Music. It can also lip sync to Alexa spoken responses when asked for information about the weather, news, or random facts.

And it will sing an original song, “Fishin’ Time.”

When the gadget is plugged in and turned on, it responds: “Woo-hoo, that feels good!”

(Oh my god, who is getting this for me for Christmas?)

“This is not your father’s Big Mouth Billy Bass,” said Vice President of Product Development at Gemmy Industries, Steven Harris, in a statement about the product’s launch. “Our new high-tech version uses the latest technology from Amazon to deliver a hilarious and interactive gadget that takes everyday activities to a fun new level.”

The fish can be wall-mounted or displayed using an included tabletop easel, the company also says.

The pop culture gag gift was first sold back in 1999, and is now updating its brand for the Alexa era.

Obviously, Big Mouth Billy Bass is not a product that was ever designed to be taken seriously – but it should be interesting to see if the updated, “high-tech version” has any impact on this item’s sales.

The idea to integrate Alexa into the talking fish actually began in 2016, when an enterprising developer hacked the fish to work with Alexa much to the internet’s delight. His Facebook post showcasing his work attracted 1.8 million views.

The Alexa-connected fish is $39.99 on Amazon.com.

(h/t Business Insider)

Last night’s 10 minutes of terror as the InSight Mars Lander descended to the Martian surface at 12,300 MPH were a nail-biter for sure, but now the robotic science platform is safe and sound — and has sent pics back to prove it.

The first thing it sent was a couple of pictures of its surroundings: Elysium Planitia, a rather boring-looking, featureless plain that is nevertheless perfect for InSight’s drilling and seismic activity work.

The images, taken with its Instrument Context Camera, are hardly exciting on their own merits — a dirty landscape viewed through a dusty tube. But when you consider that it’s of an unexplored territory on a distant planet, and that it’s Martian dust and rubble occluding the lens, it suddenly seems pretty amazing!

Decelerating from interplanetary velocity and making a perfect landing was definitely the hard part, but it was by no means InSight’s last challenge. After touching down, it still needs to set itself up and make sure that none of its many components and instruments were damaged during the long flight and short descent to Mars.

And the first good news arrived shortly after landing, relayed via NASA’s Odyssey spacecraft in orbit: a partial selfie showing that it was intact and ready to roll. The image shows, among other things, the large mobile arm folded up on top of the lander, and a big copper dome covering some other components.

Telemetry data sent around the same time show that InSight has also successfully deployed its solar panels and is collecting power with which to continue operating. These fragile fans are crucial to the lander, of course, and it’s a great relief to hear they’re working properly.

These are just the first of many images the lander will send, though unlike Curiosity and the other rovers, it won’t be traveling around taking snapshots of everything it sees. Its data will be collected from deep inside the planet, offering us insight into the planet’s — and our solar system’s — origins.

Wildfires are consuming our forests and grasslands faster than we can replace them. It’s a vicious cycle of destruction and inadequate restoration rooted, so to speak, in decades of neglect of the institutions and technologies needed to keep these environments healthy.

DroneSeed is a Seattle-based startup that aims to combat this growing problem with a modern toolkit that scales: drones, artificial intelligence, and biological engineering. And it’s even more complicated than it sounds.

A bit of background first. The problem of disappearing forests is a complex one, but it boils down to a few major factors: climate change, outdated methods, and shrinking budgets (and as you can imagine, all three are related).

Forest fires are a natural occurrence, of course. And they’re necessary, as you’ve likely read, to sort of clear the deck for new growth to take hold. But climate change, monoculture growth, population increases, lack of control burns, and other factors have led to these events taking place not just more often, but more extensively and to more permanent effect.

On average, the U.S. is losing 7 million acres a year. That’s not easy to replace to begin with — and as budgets for the likes of national and state forest upkeep have shrunk continually over the last half century, there have been fewer and fewer resources with which to combat this trend.

The most effective and common reforestation technique for a recently burned woodland is human planters carrying sacks of seedlings and manually selecting and placing them across miles of landscapes. This back-breaking work is rarely done by anyone for more than a year or two, so labor is scarce and turnover is intense.

Even if the labor were available on tap, the trees might not be. Seedlings take time to grow in nurseries, and a major wildfire might necessitate the purchase and planting of millions of new trees. It’s impossible for nurseries to anticipate this demand, and the risk associated with growing such numbers on speculation is more than many can afford. One missed guess could put the whole operation underwater.

Meanwhile if nothing gets planted, invasive weeds move in with a vengeance, claiming huge areas that were once old growth forests. Lacking the labor and tree inventory to stem this possibility, forest keepers resort to a stopgap measure: use helicopters to drench the area in herbicides to kill weeds, then saturate it with fast-growing cheatgrass or the like. (The alternative to spraying is, again, the manual approach: machetes.)

At least then, in a year, instead of a weedy wasteland, you have a grassy monoculture — not a forest, but it’ll do until the forest gets here.

One final complication: helicopter spraying is a horrendously dangerous profession. These pilots fly below 100 feet, performing high-speed maneuvers so that their sprays reach the very edge of burn zones without crashing head-on into the trees. Even so, 80 to 100 crashes occur every year in the U.S. alone.

In short, there are more and worse fires and we have fewer resources — and dated ones at that — with which to restore forests after them.

These are facts anyone in forest ecology and logging is familiar with, but they are perhaps not as well known among technologists. We do tend to stay in areas with cell coverage. But it turns out that a boost from the cloistered knowledge workers of the tech world (specifically those in the Emerald City) may be exactly what the industry and ecosystem require.

So what’s the solution to all this? Automation, right?

Automation, especially via robotics, is proverbially suited for jobs that are “dull, dirty, and dangerous.” Restoring a forest is dirty and dangerous to be sure. But dull isn’t quite right. It turns out that the process requires far more intelligence than anyone was willing, it seems, to apply to the problem — with the exception of those planters. That’s changing.

Earlier this year, DroneSeed was awarded the first multi-craft, over-55-pounds unmanned aerial vehicle license ever issued by the FAA. Its custom UAV platforms, equipped with multispectral camera arrays, high-end lidar, 6-gallon tanks of herbicide, and proprietary seed dispersal mechanisms have been hired by several major forest management companies, with government entities eyeing the service as well.

These drones scout a burned area, mapping it with up to centimeter accuracy, including objects and plant species, fumigate it efficiently and autonomously, identify where trees would grow best, then deploy painstakingly designed seed-nutrient packages to those locations. It’s cheaper than people, less wasteful and dangerous than helicopters, and smart enough to scale to national forests currently at risk of permanent damage.

I met with the company’s team at their headquarters near Ballard, where complete and half-finished drones sat on top of their cases and the air was thick with capsaicin (we’ll get to that).

The idea for the company began when founder and CEO Grant Canary burned through a few sustainable startup ideas after his last company was acquired, and was told, in his despondency, that he might have to just go plant trees. Canary took his friend’s suggestion literally.

“I started looking into how it’s done today,” he told me. “It’s incredibly outdated. Even at the most sophisticated companies in the world, planters are superheroes that use bags and a shovel to plant trees. They’re being paid to move material over mountainous terrain and be a simple AI and determine where to plant trees where they will grow — microsites. We are now able to do both these functions with drones. This allows those same workers to address much larger areas faster without the caloric wear and tear.”

It may not surprise you to hear that investors are not especially hot on forest restoration (I joked that it was a “growth industry” but really because of the reasons above it’s in dire straits).

But investors are interested in automation, machine learning, drones, and especially government contracts. So the pitch took that form. With the money DroneSeed secured, it has built its modestly sized but highly accomplished team and produced the prototype drones with which it has captured several significant contracts before even announcing that it exists.

“We definitely don’t fit the mold or metrics most startups are judged on. The nice thing about not fitting the mold is people double take and then get curious,” Canary said. “Once they see we can actually execute and have been with 3 of the 5 largest timber companies in the US for years, they get excited and really start advocating hard for us.”

The company went through Techstars, and Social Capital helped them get on their feet, with Spero Ventures joining up after the company got some groundwork done.

If things go as DroneSeed hopes, these drones could be deployed all over the world by trained teams, allowing spraying and planting efforts in nurseries and natural forests to take place exponentially faster and more efficiently than they are today. It’s genuine change-the-world-from-your-garage stuff, which is why this article is so long.

The job at hand isn’t simple or even straightforward. Every landscape differs from every other, not just in the shape and size of the area to be treated but the ecology, native species, soil type and acidity, type of fire or logging that cleared it, and so on. So the first and most important task is to gather information.

For this, DroneSeed has a special craft equipped with a sophisticated imaging stack. This first pass is done using waypoints set on satellite imagery.

The information collected at this point is really far more detailed than what’s actually needed. The lidar, for instance, collects spatial information at a resolution much beyond what’s needed to understand the shape of the terrain and major obstacles. It produces a 3D map of the vegetation as well as the terrain, allowing the system to identify stumps, roots, bushes, new trees, erosion, and other important features.

This works hand in hand with the multispectral camera, which collects imagery not just in the visible bands — useful for identifying things — but also in those outside the human range, which allows for in-depth analysis of the soil and plant life.
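One widely used example of what bands outside the visible range make possible is the normalized difference vegetation index (NDVI), which compares near-infrared and red reflectance. The article does not say which indices DroneSeed computes, so treat this purely as an illustration; the reflectance values and the 0.3 cutoff are hypothetical.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel.

    Inputs are reflectances in [0, 1]. Healthy plants reflect strongly in
    near-infrared and absorb red light, so they score close to 1.
    """
    total = nir + red
    if total == 0:
        return 0.0  # avoid dividing by zero on a fully dark pixel
    return (nir - red) / total


def looks_vegetated(nir, red, cutoff=0.3):
    """Crude vegetation flag; the cutoff is an arbitrary example value."""
    return ndvi(nir, red) > cutoff


# Hypothetical reflectance pairs (nir, red).
print(round(ndvi(0.50, 0.08), 2))   # leafy canopy
print(round(ndvi(0.30, 0.25), 2))   # bare soil
```

Per-pixel scores like this, laid over the lidar-derived terrain model, are the sort of input that can separate live vegetation from scorched ground.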

The resulting map of the area is not just useful for drone navigation, but for the surgical strikes that are necessary to make this kind of drone-based operation worth doing in the first place. No doubt there are researchers who would love to have this data as well.

Now, spraying and planting are very different tasks. The first tends to be done indiscriminately using helicopters, and the second by laborers who burn out after a couple of years; as mentioned above, it’s incredibly difficult work. The challenge in the first case is to improve efficiency and efficacy, while in the second it is to automate something that requires considerable intelligence.

Spraying is in many ways simpler. Identifying invasive plants isn’t easy, exactly, but it can be done with imagery like that the drones are collecting. Having identified patches of a plant to be eliminated, the drones can calculate a path and expend only as much herbicide as is necessary to kill them, instead of dumping hundreds of gallons indiscriminately on the entire area. It’s cheaper and more environmentally friendly. Naturally, the opposite approach could be used for distributing fertilizer or some other agent.
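The saving from spot treatment is easy to see with a toy grid. In the sketch below, the map is a made-up mask of cells flagged as weeds, and the cell size and dose rate are placeholder numbers, not DroneSeed's figures.

```python
def herbicide_needed(weed_mask, cell_area_m2, dose_l_per_m2):
    """Return (targeted_liters, blanket_liters) for a gridded site map.

    weed_mask is a grid of 0/1 flags, where 1 marks a cell with weeds.
    """
    flagged = sum(cell for row in weed_mask for cell in row)
    total = sum(len(row) for row in weed_mask)
    per_cell = cell_area_m2 * dose_l_per_m2
    return flagged * per_cell, total * per_cell


# Hypothetical 4 x 5 site with five weedy cells.
site = [
    [0, 0, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 1, 0, 0, 0],
]
targeted, blanket = herbicide_needed(site, cell_area_m2=1.0, dose_l_per_m2=0.5)
print(targeted, blanket)  # 2.5 liters versus 10.0 liters
```

Here a quarter of the cells are flagged, so targeted spraying uses a quarter of the herbicide; the real benefit depends on how patchy the infestation actually is.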

I’m making it sound easy again. This isn’t a plug and play situation — you can’t buy a DJI drone and hit the “weedkiller” option in its control software. A big part of this operation was the creation not only of the drones themselves, but the infrastructure with which to deploy them.

The drones themselves are unique, but not alarmingly so. They’re heavy-duty craft, capable of lifting well over the 57 pounds of payload they carry (the FAA limits them to 115 pounds).

“We buy and gut aircraft, then retrofit them,” Canary explained simply. Their head of hardware would probably like to think there’s a bit more to it than that, but really the problem they’re solving isn’t “make a drone” but “make drones plant trees.” To that end, Canary explained, “the most unique engineering challenge was building a planting module for the drone that functions with the software.” We’ll get to that later.

DroneSeed deploys drones in swarms, which means as many as five drones in the air at once — which in turn means they need two trucks and trailers with their boxes, power supplies, ground stations, and so on. The company’s VP of operations comes from a military background where managing multiple aircraft onsite was part of the job, and she’s brought her rigorous command of multi-aircraft environments to the company.

The drones take off and fly autonomously, but always under direct observation by the crew. If anything goes wrong, they’re there to take over, though of course there are plenty of autonomous behaviors for what to do in case of, say, a lost positioning signal or bird strike.

They fly in patterns calculated ahead of time to be the most efficient, spraying at problem areas when they’re over them, and returning to the ground stations to have power supplies swapped out before returning to the pattern. It’s key to get this process down pat, since efficiency is a major selling point. If a helicopter does it in a day, why shouldn’t a drone swarm? It would be sad if they had to truck the craft back to a hangar and recharge them every hour or two. It also increases logistics costs like gas and lodging if it takes more time and driving.

This means the team involves several people as well as several drones. Qualified pilots and observers are needed, as well as people familiar with the hardware and software who can maintain and troubleshoot on site, usually with no cell signal or other support. Like many other forms of automation, this one brings its own new job opportunities to the table.

The actual planting process is deceptively complex.

The idea of loading up a drone with seeds and setting it free on a blasted landscape is easy enough to picture. Hell, it’s been done. There are efforts going back decades to essentially load seeds or seedlings into guns and fire them out into the landscape at speeds high enough to bury them in the dirt: in theory this combines the benefits of manual planting with the scale of carpeting the place with seeds.

But whether it was slapdash placement or the shock of being fired out of a seed gun, this approach never seemed to work.

Forestry researchers have shown the effectiveness of finding the right “microsite” for a seed or seedling; in fact, it’s why manual planting works as well as it does. Trained humans find perfect spots to put seedlings: in the lee of a log; near but not too near the edge of a stream; on the flattest part of a slope, and so on. If you really want a forest to grow, you need optimal placement, perfect conditions, and preventative surgical strikes with pesticides.

Although it’s difficult, it’s also the kind of thing that a machine learning model can become good at. Sorting through messy, complex imagery and finding local minima and maxima is a specialty of today’s ML systems, and the aerial imagery from the drones is rich in relevant data.

The company’s CTO led the creation of an ML model that determines the best locations to put trees at a site — though this task can be highly variable depending on the needs of the forest. A logging company might want a tree every couple feet even if that means putting them in sub-optimal conditions — but a few inches to the left or right may make all the difference. On the other hand, national forests may want more sparse deployments or specific species in certain locations to curb erosion or establish sustainable firebreaks.
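The trade-off between dense and sparse planting can be sketched in a few lines of Python. To be clear, this is not the company’s model. It assumes some upstream system has already scored each cell of a grid for suitability, and it swaps in a simple greedy rule (take the best remaining cell that is far enough from every cell already chosen) in place of whatever DroneSeed actually uses. The function name `pick_microsites`, the scores, and the spacing values are all made up for illustration.

```python
import math


def pick_microsites(suitability, min_spacing, max_sites):
    """Greedily pick the highest-scoring grid cells that are at least
    min_spacing cells apart (Euclidean distance, in cell units)."""
    # Flatten the grid into (score, row, col) tuples, best score first.
    # Ties are broken by row, then column, so the result is deterministic.
    cells = sorted(
        ((score, r, c)
         for r, row in enumerate(suitability)
         for c, score in enumerate(row)),
        key=lambda t: (-t[0], t[1], t[2]),
    )
    chosen = []
    for _score, r, c in cells:
        if len(chosen) >= max_sites:
            break
        # Accept the cell only if it keeps its distance from every earlier pick.
        if all(math.hypot(r - pr, c - pc) >= min_spacing for pr, pc in chosen):
            chosen.append((r, c))
    return chosen


# Hypothetical suitability scores in [0, 1]; higher means a better microsite.
grid = [
    [0.1, 0.2, 0.9, 0.3],
    [0.2, 0.8, 0.7, 0.1],
    [0.6, 0.3, 0.2, 0.5],
    [0.1, 0.4, 0.3, 0.95],
]
dense = pick_microsites(grid, min_spacing=1.5, max_sites=10)   # logging-style density
sparse = pick_microsites(grid, min_spacing=3.0, max_sites=10)  # sparser deployment
print(dense)
print(sparse)
```

Tightening the spacing forces the picker into lower-scoring cells. That is the compromise described above for a customer who wants a tree every couple of feet.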

Once the data has been crunched, the map is loaded into the drones’ hive mind and the convoy goes to the location, where the craft are loaded up with seeds instead of herbicides.

But not just any old seeds! You see, that’s one more wrinkle. If you just throw a sagebrush seed on the ground, even if it’s in the best spot in the world, it could easily be snatched up by an animal, roll or wash down to a nearby crevasse, or simply fail to find the right nutrients in time despite the planter’s best efforts.

That’s why DroneSeed’s Head of Planting and his team have been working on a proprietary seed packet that they were unbelievably reticent to detail.

From what I could gather, they’ve put a ton of work into packaging the seeds into nutrient-packed little pucks held together with a biodegradable fiber. The outside is dusted with capsaicin, the chemical that makes spicy food spicy (and also what makes bear spray do what it does). If they hadn’t told me, I might have guessed, since the workshop area was hazy with it, leading us all to cough and tear up a little. If I were a marmot, I’d learn to avoid these things real fast.

The pucks, or “seed vessels,” can and must be customized for the location and purpose — you have to match the content and acidity of the soil, things like that. DroneSeed will have to make millions of these things, but it doesn’t plan to be the manufacturer.

Finally, these pucks are loaded into a special puck dispenser which, closely coordinating with the drone, spits one out at the exact moment and speed needed to put it within a few centimeters of the microsite.
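The company didn’t share how the dispenser decides when to fire, but the basic physics of “exact moment and speed” is easy to sketch. The Python below assumes a puck ejected straight down from a drone in level flight and ignores air resistance and wind entirely. The function names and the example numbers are hypothetical, not taken from DroneSeed.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def fall_time(height_m, eject_speed_mps=0.0):
    """Seconds for a puck to reach the ground when ejected straight down
    at eject_speed_mps from height_m (no drag).

    Solves height = eject_speed * t + 0.5 * G * t**2 for the positive root.
    """
    return (-eject_speed_mps
            + math.sqrt(eject_speed_mps ** 2 + 2 * G * height_m)) / G


def release_lead_distance(height_m, ground_speed_mps, eject_speed_mps=0.0):
    """Horizontal distance before the microsite at which to release,
    since the puck keeps the drone's forward speed while it falls."""
    return ground_speed_mps * fall_time(height_m, eject_speed_mps)


# Illustrative numbers only: 4.905 m altitude, 3 m/s ground speed.
t_drop = fall_time(4.905)
lead = release_lead_distance(4.905, ground_speed_mps=3.0)
lead_fast = release_lead_distance(4.905, ground_speed_mps=3.0, eject_speed_mps=5.0)
print(t_drop, lead, lead_fast)
```

Firing the puck faster shortens the fall and so shrinks the lead distance. That is one plausible reason the ejection speed matters as much as the timing.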

All these factors should improve the survival rate of seedlings substantially. That means that the company’s methods will not only be more efficient, but more effective. Reforestation is a numbers game played at scale, and even slight improvements — and DroneSeed is promising more than that — are measured in square miles and millions of tons of biomass.

DroneSeed has already signed several big contracts for spraying, and planting is next. Unfortunately, the timing on their side meant they missed this year’s planting season, though by doing a few small sites and showing off the results, they’ll be in pole position for next year.

After demonstrating the effectiveness of the planting technique, the company expects to expand its business substantially. That’s the scaling part — again, not easy, but easier than hiring another couple thousand planters every year.

Ideally the hardware can be assigned to local teams that do the on-site work, producing loci of activity around major forests from which jobs can be deployed at large or small scales. A set of 5 or 6 drones does the work of a helicopter, roughly speaking, so depending on the volume requested by a company or forestry organization you may need dozens on demand.

That’s all yet to be explored, but DroneSeed is confident that the industry will see the writing on the wall when it comes to the old methods, and identify the company’s approach as a solution that fits the future.

If it sounds like I’m cheerleading for this company, that’s because I am. It’s not often in the world of tech startups that you find a group of people who are not just attempting to solve a serious problem (it’s common enough to find companies hitting this or that issue) but who have also spent the time, gathered the expertise, and really done the dirty, boots-on-the-ground work that needs to happen so it goes from great idea to real company.

That’s what I felt was the case with DroneSeed, and here’s hoping their work pays off — for their sake, sure, but mainly for ours.

Come comment on this article: No surprise, the Huawei Mate 20 Pro is extremely fragile

Come comment on this article: Samsung’s Galaxy S10 cameras get detailed for all four upcoming models

Come comment on this article: [TA Deals] Grab a discounted subscription to Private Internet Access VPN up to 70% off

Come comment on this article: Samsung is working on software update to fix Galaxy Note 9’s camera freezing bug

Come comment on this article: [Deal] Check out Anker’s Cyber Monday Deals in the US and the UK

Come comment on this article: Google’s Fuchsia OS will be tested by Huawei on the Honor Play