Come comment on this article: Samsung’s first-quarter profits took a nosedive, down 60% from last year

Samsung’s first-quarter profits took a nosedive, down 60% from last year appeared first on http://www.talkandroid.com

Come comment on this article: A Galaxy S10 5G has exploded, but Samsung says it’s not their fault

Come comment on this article: Energizer’s massive battery phone is DOA, and it never even got funded

Come comment on this article: [TA Deals] Protect your PC with a discounted GlassWire Elite subscription (89% off)

Come comment on this article: Ten best cases for the Huawei P30 Pro

Come comment on this article: Has Samsung fixed its faulty Galaxy Fold already?

Come comment on this article: OnePlus 7 Pro zoom camera samples are here, but they’re not quite flagship quality

Come comment on this article: Microsoft study reveals smart-things adoption and privacy concerns

Come comment on this article: The crowdfunding campaign for Energizer’s giant battery phone ran out of juice

Come comment on this article: [Deal] Check out Anker’s latest deals as well as the new Atom PD-2 Wall Charger

Amazon announced today it has begun to ask customers to participate in a preview program that will help the company build a Spanish-language Alexa experience for U.S. users. The program, which is currently invite-only, will allow Amazon to incorporate into the U.S. Spanish-language experience a better understanding of things like word choice and local humor, as it has done with prior language launches in other regions. In addition, developers have been invited to begin building Spanish-language skills, also starting today, using the Alexa Skills Kit.

The latter was announced on the Alexa blog, noting that any skills created now will be made available to the customers in the preview program for the time being. They’ll then roll out to all customers when Alexa launches in the U.S. with Spanish-language support later this year.

Manufacturers who want to build “Alexa Built-in” products for Spanish-speaking customers can also now request early access to a related Alexa Voice Service (AVS) developer preview. Amazon says that Bose, Facebook and Sony are preparing to do so, while smart home device makers, including Philips, TP-Link and Honeywell Home, will bring to U.S. users “Works with Alexa” devices that support Spanish.

Ahead of today, Alexa had supported Spanish language skills, but only in Spain and Mexico — not in the U.S. Those developers can opt to extend their existing skills to U.S. customers, Amazon says.

In addition to Spanish, developers have also been able to create skills in English in the U.S., U.K., Canada, Australia, and India; as well as in German, Japanese, French (in France and in Canada), and Portuguese (in Brazil). But on the language front, Google has had a decided advantage thanks to its work with Google Voice Search and Google Translate over the years.

Last summer, Google Home rolled out support for Spanish, in addition to launching the device in Spain and Mexico.

Amazon also trails Apple in terms of support for Spanish in the U.S., as Apple added support for Spanish to the HomePod in the U.S., Spain and Mexico in September 2018.

Spanish is a widely spoken language in the U.S. According to a 2015 report by Instituto Cervantes, the United States has the second highest concentration of Spanish speakers in the world, following Mexico. At the time of the report, there were 53 million people who spoke Spanish in the U.S. — a figure that included 41 million native Spanish speakers, and approximately 11.6 million bilingual Spanish speakers.

Come comment on this article: YouTube Music can now play local files, just like your iPod in 2009

Come comment on this article: [TA Deals] Become an AWS expert with the discounted AWS Solutions Architect Certification Bundle

Last night’s episode of “Game of Thrones” was a wild ride and inarguably one of an epic show’s more epic moments — if you could see it through the dark and the blotchy video. It turns out even one of the most expensive and meticulously produced shows in history can fall prey to the scourge of low quality streaming and bad TV settings.

The good news is this episode is going to look amazing on Blu-ray or potentially in future, better streams and downloads. The bad news is that millions of people already had to see it in a way its creators surely lament. You deserve to know why this was the case. I’ll be simplifying a bit here because this topic is immensely complex, but here’s what you should know.

(By the way, I can’t entirely avoid spoilers, but I’ll try to stay away from anything significant in words or images.)

It was clear from the opening shots in last night’s episode, “The Long Night,” that this was going to be a dark one. The army of the dead faces off against the allied living forces in the darkness, made darker by a bespoke storm brought in by, shall we say, a Mr. N.K., to further demoralize the good guys.

Thematically and cinematographically, setting this chaotic, sprawling battle at night is a powerful creative choice and a valid one, and I don’t question the showrunners, director, and so on for it. But technically speaking, setting this battle at night, and in fog, is just about the absolute worst-case scenario for the medium this show is native to: streaming home video. Here’s why.

Video has to be compressed in order to be sent efficiently over the internet, and although we’ve made enormous strides in video compression and the bandwidth available to most homes, there are still fundamental limits.

The master video that HBO put together from the actual footage, FX, and color work that goes into making a piece of modern media would be huge: hundreds of gigabytes if not terabytes. That’s because the master has to include all the information on every pixel in every frame, no exceptions.

Imagine if you tried to “stream” a terabyte-sized video file. You’d have to be able to download 200 megabytes per second for the full 80 minutes of this episode. Few people in the world have that kind of connection; it would basically never stop buffering. Even 20 megabytes per second is asking too much by a long shot. Two megabytes per second, or 16 megabits, is doable: it sits under the 25-megabit speed (that’s bits; divide by 8 to get bytes) we use to define broadband download speeds.
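The arithmetic in that paragraph can be checked in a few lines. This is a minimal sketch assuming decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and the episode's roughly 80-minute runtime; the function names are illustrative only.

```python
def megabytes_per_second(file_size_bytes: float, duration_s: float) -> float:
    """Sustained download rate (decimal MB/s) needed to stream a file in real time."""
    return file_size_bytes / duration_s / 1e6


def megabits_per_second(mb_per_s: float) -> float:
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return mb_per_s * 8


EPISODE_SECONDS = 80 * 60  # the episode's roughly 80-minute runtime

master_rate = megabytes_per_second(1e12, EPISODE_SECONDS)  # a 1 TB master file
print(f"1 TB master: {master_rate:.0f} MB/s, or {megabits_per_second(master_rate):.0f} Mbit/s")
print(f"2 MB/s stream: {megabits_per_second(2):.0f} Mbit/s vs. a 25 Mbit/s broadband line")
```

The first line prints about 208 MB/s (roughly 1,667 Mbit/s); the second shows why 2 MB/s fits comfortably on a broadband connection.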

So how do you turn a large file into a small one? Compression — we’ve been doing it for a long time, and video, though different from other types of data in some ways, is still just a bunch of zeroes and ones. In fact it’s especially susceptible to strong compression because of how one video frame is usually very similar to the last and the next one. There are all kinds of shortcuts you can take that reduce the file size immensely without noticeably impacting the quality of the video. These compression and decompression techniques fit into a system called a “codec.”
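To see why frame-to-frame similarity helps, here is a toy delta encoder: it keeps the first frame whole and stores each later frame only as per-pixel differences from the one before. Real codecs such as H.264 go much further (motion compensation, transforms, entropy coding), so treat this as an illustration of the idea rather than how any production codec works; the eight-pixel frames are made up.

```python
Frame = list[int]  # one frame flattened to a list of pixel brightness values


def delta_encode(frames: list[Frame]) -> list[Frame]:
    """Keep the first frame whole; store later frames as differences from the previous one."""
    encoded = [list(frames[0])]
    for prev, cur in zip(frames, frames[1:]):
        encoded.append([c - p for p, c in zip(prev, cur)])
    return encoded


def delta_decode(encoded: list[Frame]) -> list[Frame]:
    """Rebuild every frame by adding each difference frame to the one before it."""
    frames = [list(encoded[0])]
    for diff in encoded[1:]:
        frames.append([p + d for p, d in zip(frames[-1], diff)])
    return frames


# Three tiny frames in which only one pixel changes from one frame to the next.
frames = [
    [10, 10, 10, 10, 50, 50, 50, 50],
    [10, 10, 10, 10, 50, 50, 52, 50],
    [10, 10, 10, 10, 50, 50, 52, 51],
]
encoded = delta_encode(frames)
zeros = sum(v == 0 for diff in encoded[1:] for v in diff)
print(encoded[1])                                  # [0, 0, 0, 0, 0, 0, 2, 0]
print(f"{zeros} of 16 difference values are zero")  # 14 of 16
```

Long runs of zeros are exactly what a later compression stage can store very cheaply, which is where the savings come from.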

But there are exceptions to that, and one of them has to do with how compression handles color and brightness. Basically, when the image is very dark, it can’t display color very well.

Think about it like this: There are only so many ways to describe colors in a few words. If you have one word you can say red, or maybe ochre or vermilion depending on your interlocutor’s vocabulary. But if you have two words you can say dark red, darker red, reddish black, and so on. The codec has a limited vocabulary as well, though its “words” are the number of bits it can use to describe a pixel.

This lets it succinctly describe a huge array of colors with very little data by saying, this pixel has this bit value of color, this much brightness, and so on. (I didn’t originally want to get into this, but this is what people are talking about when they say bit depth, or even “highest quality pixels.”)
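A rough way to see what bit depth buys: each extra bit doubles the number of values a channel can take. This sketch assumes three independent channels (like RGB) stored at full resolution; real video codecs usually store brightness and color separately (Y′CbCr), often at reduced color resolution, so the totals are illustrative rather than exact for any particular stream.

```python
def levels(bits_per_channel: int) -> int:
    """Number of distinct values one channel can take at a given bit depth."""
    return 2 ** bits_per_channel


def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Distinct combinations across all channels (assumes independent channels)."""
    return levels(bits_per_channel) ** channels


for bits in (8, 10):
    print(f"{bits}-bit: {levels(bits)} levels per channel, {total_colors(bits):,} colors")
# 8-bit: 256 levels per channel, 16,777,216 colors
# 10-bit: 1024 levels per channel, 1,073,741,824 colors
```

Millions of combinations sounds like plenty, but only a small slice of those levels sits near black, which is what matters in a scene like this one.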

But this also means that there are only so many gradations of color and brightness it can show. Going from a very dark grey to a slightly lighter grey, it might be able to pick 5 intermediate shades. That’s perfectly fine if it’s just on the hem of a dress in the corner of the image. But what if the whole image is limited to that small selection of shades?

Then you get what we saw last night. See how Jon (I think) is made up almost entirely of only a handful of different colors (brightnesses of a similar color, really), with big, obvious borders between them?

This issue is called “banding,” and it’s hard not to notice once you see how it works. Images on video can be incredibly detailed, but places where there are subtle changes in color — often a clear sky or some other large but mild gradient — will exhibit large stripes as the codec goes from “darkest dark blue” to “darker dark blue” to “dark blue,” with no “darker darker dark blue” in between.

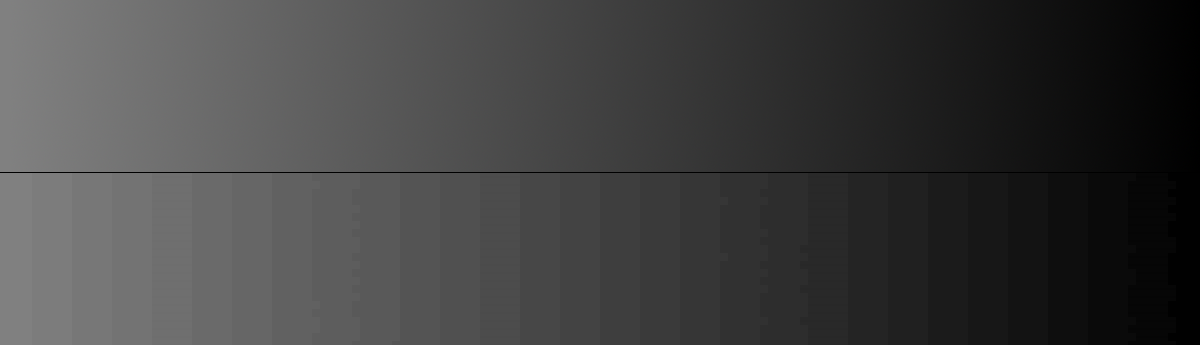

Check out this image.

Above is a smooth gradient encoded with high color depth. Below that is the same gradient encoded with lossy JPEG encoding — different from what HBO used, obviously, but you get the idea.

Banding has plagued streaming video forever, and it’s hard to avoid even in major productions — it’s just a side effect of representing color digitally. It’s especially distracting because obviously our eyes don’t have that limitation. A high-definition screen may actually show more detail than your eyes can discern from couch distance, but color issues? Our visual systems flag them like crazy. You can minimize it, but it’s always going to be there, until the point when we have as many shades of grey as we have pixels on the screen.
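Banding can be reproduced by quantizing a smooth ramp and counting the runs of identical values. The sketch below assumes a dark horizontal gradient from 2% to 4% brightness across a 1920-pixel-wide frame and models only bit depth, not the other compression steps that make real banding worse; the numbers are illustrative, not measured from the episode.

```python
def quantize(value: float, bits: int) -> int:
    """Map a brightness in [0.0, 1.0] to the nearest of 2**bits integer codes."""
    return round(value * (2 ** bits - 1))


def count_bands(start: float, end: float, width: int, bits: int) -> int:
    """Quantize a smooth left-to-right ramp and count runs of identical codes."""
    codes = [quantize(start + (end - start) * x / (width - 1), bits) for x in range(width)]
    # Every change in code between neighboring pixels starts a new visible band.
    return 1 + sum(1 for a, b in zip(codes, codes[1:]) if a != b)


# A dark ramp from 2% to 4% brightness across a 1920-pixel-wide frame.
for bits in (8, 10):
    print(f"{bits}-bit: {count_bands(0.02, 0.04, 1920, bits)} bands")
# 8-bit: 6 bands
# 10-bit: 22 bands
```

Six bands across a full-width frame means each stripe is over 300 pixels wide, which is the kind of step the eye picks out immediately.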

So back to last night’s episode. Practically the entire show took place at night, which removes about 3/4 of the codec’s brightness-color combos right there. It also wasn’t a particularly colorful episode, a directorial or photographic choice that highlighted things like flames and blood, but further limited the ability to digitally represent what was on screen.

It wouldn’t be too bad if the background was black and people were lit well so they popped out, though. The last straw was the introduction of the cloud, fog, or blizzard, whatever you want to call it. This kept the brightness of the background just high enough that the codec had to represent it with one of its handful of dark greys, and the subtle movements of fog and smoke came out as blotchy messes (often called “compression artifacts” as well) as the compression desperately tried to pick what shade was best for a group of pixels.

Just brightening it doesn’t fix things, either — because the detail is already crushed into a narrow range of values, you just get a bandy image that never gets completely black, making it look washed out, as you see here:

(Anyway, the darkness is a stylistic choice. You may not agree with it, but that’s how it’s supposed to look and messing with it beyond making the darkest details visible could be counterproductive.)
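Why brightening can't help: any adjustment made after decoding maps each transmitted value to a new one, so it can spread the bands apart but never create the in-between shades that were discarded. A minimal sketch, assuming a simple gain applied to made-up 8-bit values (real TV brightness and gamma controls are more involved):

```python
def brighten(codes: list[int], gain: float, max_code: int = 255) -> list[int]:
    """Scale decoded 8-bit values by a gain, clipping at the top of the range."""
    return [min(max_code, round(c * gain)) for c in codes]


# A made-up banded patch of a dark frame: only four distinct 8-bit codes survive.
dark_patch = [5, 5, 6, 6, 6, 7, 7, 8, 8, 8]
brighter = brighten(dark_patch, 4.0)

print(sorted(set(dark_patch)))  # [5, 6, 7, 8]
print(sorted(set(brighter)))    # [20, 24, 28, 32]
```

There are still only four shades, now four codes apart instead of one, and the darkest value moves from 5 up to 20, further from true black: the washed-out, still-banded look described above.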

Now, it should be said that compression doesn’t have to be this bad. For one thing, the more data it is allowed to use, the more gradations it can describe, and the less severe the banding. It’s also possible (though I’m not sure where it’s actually done) to repurpose the rest of the codec’s “vocabulary” to describe a scene where its other color options are limited. That way the full bandwidth can be used to describe a nearly monochromatic scene even though strictly speaking it should be only using a fraction of it.

But neither of these is likely an option for HBO. Increasing the bandwidth of the stream is costly, since this is being sent out to tens of millions of people; a bitrate increase big enough to change the quality would also massively swell their data costs. When you’re distributing to that many people, a higher bitrate also introduces the risk of hated buffering or errors in playback, which are obviously a big no-no. It’s even possible that HBO lowered the bitrate because of network limitations; “Game of Thrones” really is on the frontier of digital distribution.

And using an exotic codec might not be possible because only commonly used commercial ones are really capable of being applied at scale. Kind of like how we try to use standard parts for cars and computers.
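For a sense of scale on the bandwidth side of that argument, here is the extra traffic a bitrate bump would create. The inputs (a 2 Mbit/s increase, 10 million streams, the 80-minute runtime) are hypothetical round numbers, not figures from HBO.

```python
def extra_data_bytes(extra_mbit_per_s: float, duration_s: float, viewers: int) -> float:
    """Additional bytes delivered if every viewer's stream gets the same bitrate bump."""
    bits_per_viewer = extra_mbit_per_s * 1e6 * duration_s
    return bits_per_viewer / 8 * viewers


# Hypothetical inputs: +2 Mbit/s, an 80-minute episode, 10 million streams.
per_viewer = extra_data_bytes(2, 80 * 60, 1)
total = extra_data_bytes(2, 80 * 60, 10_000_000)
print(f"{per_viewer / 1e9:.1f} GB extra per viewer")  # 1.2 GB
print(f"{total / 1e15:.1f} PB extra in total")        # 12.0 PB
```

A modest-sounding bump per stream turns into petabytes once it is multiplied across an audience this size.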

This episode almost certainly looked fantastic in the mastering room and FX studios, where they not only had carefully calibrated monitors with which to view it but also were working with brighter footage (it would be darkened to taste by the colorist) and less or no compression. They might not even have seen the “final” version that fans “enjoyed.”

We’ll see the better copy eventually, but in the meantime the choice of darkness, fog, and furious action meant the episode was going to be a muddy, glitchy mess on home TVs.

And while we’re on the topic…

Well… to be honest, it might be that too. What I can tell you is that simply having a “better” TV by specs, such as 4K or a higher refresh rate or whatever, would make almost no difference in this case. Even built-in de-noising and de-banding algorithms would be hard pressed to make sense of “The Long Night.” And one of the best new display technologies, OLED, might even make it look worse! Its “true blacks” are much darker than an LCD’s backlit blacks, so the jump to the darkest grey could be way more jarring.

That said, it’s certainly possible that your TV is also set up poorly. Those of us sensitive to this kind of thing spend forever fiddling with settings and getting everything just right for exactly this kind of situation.

Now who’s “wasting his time” calibrating his TV?

— John Siracusa (@siracusa) April 29, 2019

Usually “calibration” is actually a pretty simple process of making sure your TV isn’t on the absolute worst settings, which unfortunately many are out of the box. Here’s a very basic three-point guide to “calibrating” your TV:

Unfortunately none of these things will make “The Long Night” look any better until HBO releases a new version of it. Those ugly bands and artifacts are baked right in. But if you have to blame anyone, blame the streaming infrastructure that wasn’t prepared for a show taking risks in its presentation, risks I would characterize as bold and well executed, unlike the writing in the show lately. Oops, sorry, couldn’t help myself.

If you really want to experience this show the way it was intended, the fanciest TV in the world wouldn’t have helped last night, though when the Blu-ray comes out you’ll be in for a treat. But here’s hoping the next big battle takes place in broad daylight.

Come comment on this article: Here’s everything we know about the Galaxy Note 10: Design, specs, release date, and more

Come comment on this article: Here’s the modern Motorola RAZR, massive chin and all

Come comment on this article: The Galaxy Note 10 Pro (without 5G) will have a very fast charging 4500mAh battery

Come comment on this article: Android Q might bring new Notification Assistants to better manage your phone’s notifications

Come comment on this article: Redmi teases upcoming value flagship with popup selfie camera

This could be the upcoming Motorola Razr revival. The images purporting to show the upcoming smartphone appeared on Weibo and reveal a foldable design. Unlike the Galaxy Fold, though, Motorola’s implementation folds vertically, much like the original Razr.

This design offers a more compelling use case than other foldables. Instead of a traditional smartphone unfolding into a tablet-like display, Motorola’s design has a smaller device unfolding into a smartphone-sized display. The result is a compact phone that turns into a normal phone.

Pricing is still unclear, but the WSJ previously reported it would cost $1,500 when it’s eventually released. If it’s released.

Samsung was the first to market with the Galaxy Fold. Kind of. A few journalists were given Galaxy Fold units ahead of its launch, but a handful of units failed in the first days. Samsung quickly postponed the launch and recalled all the review units.

Despite this leak, Motorola has yet to confirm when this device will hit the market. Given Samsung’s troubles, it will likely be extra cautious before launching it to the general public.

Come comment on this article: The OnePlus 7 Pro has the best display ever, apparently

Come comment on this article: The OnePlus 7 might not have a notch after all

Come comment on this article: Sony’s mobile division might be on the chopping block

Come comment on this article: Samsung is really ashamed of the Galaxy Fold’s design, asks iFixit to remove their teardown

Come comment on this article: Microsoft previews Android-to-Windows notification mirroring

Come comment on this article: Sign up for the closed beta of Mario Kart Tour before its official release this summer

Come comment on this article: Android Q’s new ‘Scoped Storage’ permissions will be optional

Come comment on this article: [TA Deals] Name your price and pick up the Learn to Design bundle for cheap

If you’re a student at UC Berkeley, the diminutive rolling robots from Kiwi are probably a familiar sight by now, trundling along with a burrito inside to deliver to a dorm or apartment building. Now students at a dozen more campuses will be able to join this great, lazy future of robotic delivery as Kiwi expands to them with a clever student-run model.

Speaking at TechCrunch’s Robotics/AI Session at the Berkeley campus, Kiwi’s Felipe Chavez and Sasha Iatsenia discussed the success of their burgeoning business and the way they planned to take it national.

In case you’re not aware of the Kiwi model, it’s basically this: When you place an order online with a participating restaurant, you have the option of delivery via Kiwi. If you so choose, one of the company’s fleet of knee-high robots with insulated, locking storage compartments will swing by the place, your order is put within, and it brings it to your front door (or as close as it can reasonably get). You can even watch the last bit live from the robot’s perspective as it rolls up to your place.

The robots are what Kiwi calls “semi-autonomous.” This means that although they can navigate most sidewalks and avoid pedestrians, each has a human monitoring it and setting waypoints for it to follow, on average every five seconds. Iatsenia told me that they’d tried going fully autonomous and that it worked… most of the time. But most of the time isn’t good enough for a commercial service, so they’ve got humans in the loop. They’re working on improving autonomy, but for now this is how it is.
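The division of labor described here, with a person choosing where to go and the robot handling how to get there, can be sketched as a simple waypoint follower. This is a hypothetical illustration, not Kiwi's software: the class, the 1 m/s speed, and the straight-line motion with no obstacle avoidance are all assumptions; only the roughly five-second waypoint interval comes from the article.

```python
import math
from dataclasses import dataclass


@dataclass
class DeliveryRobot:
    """Hypothetical robot that drives itself toward the waypoint it was last given."""
    x: float = 0.0
    y: float = 0.0
    speed_m_per_s: float = 1.0  # assumed cruising speed, not a Kiwi spec

    def step_toward(self, wx: float, wy: float, dt: float) -> None:
        """Move in a straight line toward (wx, wy) for dt seconds, stopping on arrival."""
        dx, dy = wx - self.x, wy - self.y
        dist = math.hypot(dx, dy)
        travel = self.speed_m_per_s * dt
        if dist <= travel:
            self.x, self.y = wx, wy  # close enough: snap to the waypoint
        else:
            self.x += dx / dist * travel
            self.y += dy / dist * travel


def follow_operator(robot: DeliveryRobot, waypoints: list[tuple[float, float]],
                    seconds_per_waypoint: float = 5.0, dt: float = 0.5) -> tuple[float, float]:
    """Simulate a remote operator issuing one new waypoint every few seconds."""
    for wx, wy in waypoints:
        for _ in range(int(seconds_per_waypoint / dt)):
            robot.step_toward(wx, wy, dt)
    return robot.x, robot.y


print(follow_operator(DeliveryRobot(), [(3.0, 4.0), (3.0, 8.0)]))  # (3.0, 8.0)
```

If the operator picks a waypoint too far away to reach in one interval, the robot simply gets partway there before the next instruction arrives, which is one reason frequent waypoints matter.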

That the robots are being controlled in some fashion by a team of people in Colombia (where the co-founders hail from) does take a considerable amount of the futurism out of this endeavor, but on reflection it’s kind of a natural evolution of the existing delivery infrastructure. After all, someone has to drive the car that brings you your food as well. And in reality most AI is operated or informed directly or indirectly by actual people.

That those drivers are in South America operating multiple vehicles at a time is a technological advance over your average delivery vehicle — though it must be said that there is an unsavory air of offshoring labor to save money on wages. That said, few people shed tears over the wages earned by the Chinese assemblers who put together our smartphones and laptops, or the garbage pickers who separate your poorly sorted recycling. The global labor economy is a complicated one, and the company is making jobs in the place it was at least partly born.

Whatever the method, Kiwi has traction: it’s done more than 50,000 deliveries and the model seems to have proven itself. Customers are happy, they get stuff delivered more than ever once they get the app, and there are fewer and fewer incidents where a robot is kicked over or, you know, catches on fire. Notably, the founders said on stage, the community has really adopted the little vehicles, and should one overturn or be otherwise interfered with, it’s often set on its way soon after by a passerby.

Iatsenia and Chavez think the model is ready to push out to other campuses, where a similar effort will have to take place — but rather than do it themselves by raising millions and hiring staff all over the country, they’re trusting the robotics-loving student groups at other universities to help out.

For a small and low-cash startup like Kiwi, it would be risky to overextend by taking on a major round and using that to scale up. They started as robotics enthusiasts looking to bring something like this to their campus, so why can’t they help others do the same?

So the team looked at dozens of universities, narrowing them down by factors important to robotic delivery: layout, density, commercial corridors, demographics, and so on. Ultimately they arrived at the following list:

What they’re doing is reaching out to robotics clubs and student groups at those colleges to see who wants to take partial ownership of Kiwi administration there. Maintenance and deployment would still be handled by Berkeley students, but the student clubs would go through a certification process and then do the local work, like righting a capsized bot and handling on-site issues with customers and restaurants.

“We are exploring several options to work with students down the road including rev share,” Iatsenia told me. “It depends on the campus.”

So far they’ve sent out 40 robots to the 12 campuses listed and will be rolling out operations as the programs move forward on their own time. If you’re not one of the unis listed, don’t worry — if this goes the way Kiwi plans, it sounds like you can expect further expansion soon.

Come comment on this article: No surprise: the Galaxy Fold is really fragile and tough to repair, according to iFixit

Come comment on this article: [TA Deals] Save almost 80% on the Essential PC Utility bundle to keep your computer in top shape

Come comment on this article: Android on Chrome OS will (probably) see an update to Android Q

Come comment on this article: NVIDIA apparently working on new Android tablet with desktop mode

Come comment on this article: The new Meizu 16s is my favourite ‘bezelless’ design so far

Come comment on this article: Google rebrands Data Saver to Lite Mode in Chrome to help stretch your data caps and improve performance

Braille is a crucial skill for children with visual impairments to learn, and with these LEGO Braille Bricks kids can learn through hands-on play rather than more rigid methods like Braille readers and printouts. Given the naturally Braille-like structure of LEGO blocks, it’s surprising this wasn’t done decades ago.

The truth is, however, that nothing can be obvious enough when it comes to marginalized populations like people with disabilities. But sometimes all it takes is someone in the right position to say “You know what? That’s a great idea and we’re just going to do it.”

It happened with the BecDot (above) and it seems to have happened at LEGO. Stine Storm led the project, but Morten Blonde, who himself suffers from degenerating vision, helped guide the team with the passion and insight that only comes with personal experience.

In some remarks sent over by LEGO, Blonde describes his drive to help:

When I was contacted by the LEGO Foundation to function as internal consultant on the LEGO Braille Bricks project, and first met with Stine Storm, where she showed me the Braille bricks for the first time, I had a very emotional experience. While Stine talked about the project and the blind children she had visited and introduced to the LEGO Braille Bricks I got goose bumps all over the body. I just knew that I had to work on this project.

I want to help all blind and visually impaired children in the world dare to dream and see that life has so much in store for them. When, some years ago, I was hit by stress and depression over my blind future, I decided one day that life is too precious for me not to enjoy every second of. I would like to help give blind children the desire to embark on challenges, learn to fail, learn to see life as a playground, where anything can come true if you yourself believe that they can come true. That is my greatest ambition with my participation in the LEGO Braille Bricks project.

The bricks themselves are very like the originals, specifically the common 2×4 blocks, except they don’t have the full 8 “studs” (so that’s what they’re called). Instead, they have the letters of the Braille alphabet, which happens to fit comfortably in a 2×3 array of studs, with room left on the bottom to put a visual indicator of the letter or symbol for sighted people.
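The 2×3 layout maps directly onto the six dots of a standard Braille cell, numbered 1 to 3 down the left column and 4 to 6 down the right. This sketch covers only the basic letters a to z and draws each as a stud grid ('o' for a stud, '.' for a blank); it doesn't model LEGO's actual brick design or the printed letter below the studs.

```python
# Dot numbers for a-j; dots 1-3 run down the left column, 4-6 down the right.
BASE = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}


def braille_dots(letter: str) -> set[int]:
    """Raised dots for one basic Braille letter a-z."""
    letter = letter.lower()
    if len(letter) != 1:
        raise ValueError("expected a single letter")
    if letter in BASE:
        return set(BASE[letter])
    if "k" <= letter <= "t":  # k-t reuse a-j and add dot 3
        return BASE[chr(ord(letter) - 10)] | {3}
    if letter == "w":  # w was not in Braille's original French alphabet, so it breaks the pattern
        return {2, 4, 5, 6}
    if letter in "uvxyz":  # u, v, x, y, z reuse a-e and add dots 3 and 6
        return BASE["abcde"["uvxyz".index(letter)]] | {3, 6}
    raise ValueError(f"not a letter a-z: {letter!r}")


def stud_grid(letter: str) -> list[str]:
    """Render a letter as three rows of two studs: 'o' raised, '.' blank."""
    dots = braille_dots(letter)
    return ["".join("o" if (row + 1 + 3 * col) in dots else "." for col in range(2))
            for row in range(3)]


for row in stud_grid("y"):
    print(row)
# oo
# .o
# oo
```

Since a letter like "a" uses a single stud while "y" uses five, some bricks grip their neighbors far less than others, which matches the versatility caveat that follows.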

They’re compatible with ordinary LEGO bricks and of course can be stacked and attached to one another, though not with quite the same versatility as an ordinary block, since some symbols have fewer studs. You’ll probably want to keep them separate, since they’re more or less identical unless you inspect them individually.

All told the set, which will be provided for free to institutions serving vision-impaired students, will include about 250 pieces: A-Z (with regional variants), the numerals 0-9, basic operators like + and =, and some “inspiration for teaching and interactive games.” Perhaps some specialty pieces for word games and math toys, that sort of thing.

LEGO was already one of the toys that can be enjoyed equally by sighted and vision-impaired children, but this adds a new layer, or I suppose just re-engineers an existing and proven one, to extend and specialize the decades-old toy for a group that already seems to have taken to it:

“The children’s level of engagement and their interest in being independent and included on equal terms in society is so evident. I am moved to see the impact this product has on developing blind and visually impaired children’s academic confidence and curiosity already in its infant days,” said Blonde.

Danish, Norwegian, English, and Portuguese blocks are being tested now, with German, Spanish and French on track for later this year. The kit should ship in 2020 — if you think your classroom could use these, get in touch with LEGO right away.

Come comment on this article: Did we really need a followup to the Samsung Galaxy View?

It’s been a month since Huawei unveiled its latest flagship device — the Huawei P30 Pro. I’ve played with the P30 and P30 Pro for a few weeks and I’ve been impressed with the camera system.

The P30 Pro is the successor to the P20 Pro and features improvements across the board. It could have been a truly remarkable phone, but some issues still hold it back compared to more traditional Android phones, such as the Google Pixel 3 or OnePlus 6T.

The P30 Pro is by far the most premium device in the P line. It features a gigantic 6.47-inch OLED display, a small teardrop notch near the top, an integrated fingerprint sensor in the display and a lot of cameras.

Before diving into the camera system, let’s talk about the overall feel of the device. Compared to last year’s P20 Pro, the company moved the fingerprint sensor from below the screen into the display itself and made the notch smaller. The in-display sensor doesn’t perform as well as a dedicated fingerprint sensor, but it gets the job done.

It has become hard to differentiate smartphones based on design, and the P30 Pro looks a lot like the OnePlus 6T or the Samsung Galaxy S10. The display has a 19.5:9 aspect ratio with a 2340×1080 resolution, and it curves around the edges.

The result is a phone with gentle curves. The industrial design is less angular, even though the top and bottom edges of the device have been flattened. Huawei uses an aluminum frame and a glass back with colorful gradients.

Unfortunately, the curved display doesn’t work so well in practice. If you open an app with a unified white background, such as Gmail, you can see some odd-looking shadows near the edges.
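The display figures quoted earlier are consistent with each other: 2340 ÷ 1080 × 9 = 19.5, and dividing the diagonal pixel count by the screen size gives the pixel density. A quick check, assuming the 6.47-inch figure is the diagonal of the full 2340×1080 panel (curved edges and rounded corners can make marketing measurements differ slightly):

```python
import math


def aspect_ratio(long_px: int, short_px: int, short_units: int = 9) -> float:
    """Express long:short as X:short_units (e.g. 19.5:9)."""
    return long_px * short_units / short_px


def pixel_density(long_px: int, short_px: int, diagonal_in: float) -> float:
    """Pixels per inch along the diagonal."""
    return math.hypot(long_px, short_px) / diagonal_in


print(f"{aspect_ratio(2340, 1080)}:9")               # 19.5:9
print(f"{pixel_density(2340, 1080, 6.47):.0f} ppi")  # 398 ppi
```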

Below the surface, the P30 Pro uses a Kirin 980 system-on-a-chip. Huawei’s homemade chip performs well. To be honest, smartphones have been performing well for a few years now. It’s hard to complain about performance anymore.

The phone features a 40W USB-C charging port and an impressive 4,200 mAh battery, but no headphone jack. For the first time, Huawei added wireless charging to the P series (up to 15W).

You can also charge another phone or an accessory with reverse wireless charging, just like on the Samsung Galaxy S10. Unfortunately, you have to manually activate the feature in the settings every time you want to use it.

Huawei has also removed the speaker grille at the top of the display. The company now vibrates the screen to turn it into a tiny speaker for your calls. In my experience, it works well.

While the phone ships with Android 9 Pie, Huawei still applies a lot of software customization with its EMUI 9.1 user interface. There are a dozen useless Huawei apps that probably make sense in China, but don’t need to be there if you use Google apps.

For instance, the HiCare app keeps sending me notifications. The onboarding process is also still quite confusing, as some screens refer to Huawei features while others refer to standard Android features. It definitely won’t be a good experience for people who aren’t tech-savvy.

The P20 Pro already had some great camera sensors and paved the way for night photos in recent Android devices. The P30 Pro camera system can be summed up in two words — more and better.

The P30 Pro now features not one, not two, not three but f-o-u-r sensors on the back of the device.

It has already become something of a meme: yes, the zoom works incredibly well on the P30 Pro. In addition to packing a lot of megapixels into the main sensor, the company added a telephoto lens with a periscope design. The module uses a mirror to bend the light at a right angle, which makes room for more layers of glass without making the phone too thick.

And it works incredibly well in daylight. Unfortunately, you won’t be able to use the telephoto lens at night, as it doesn’t perform as well as the main camera.

The company also combines the main camera sensor with the telephoto sensor to let you capture photos at 10x using a hybrid digital-optical zoom.

In addition to hardware improvements, Huawei has also worked on the algorithms that process your shots. Night mode performs incredibly well. You just have to hold your phone still for 8 seconds so that it can capture as much light as possible. Here's what it looks like in a completely dark room vs. an iPhone X:

Huawei has also improved HDR processing and portrait photos. The new time-of-flight sensor, for instance, does a good job of distinguishing a face from the background.

Once again, Huawei is a bit too heavy-handed with post-processing. If you use your camera with the Master AI setting, colors are too saturated. The grass appears much greener than it is in reality. Skin smoothing with the selfie camera still feels weird too. The phone also aggressively smooths surfaces in dark shots.

When you pick a smartphone brand, you also pick a certain photography style. I'm not a fan of saturated photos, so Huawei's bias toward unnatural colors doesn't suit my taste.

But if you like extremely vivid shots and insanely good sensors, the P30 Pro is for you. That array of lenses also opens up a lot of possibilities and gives you more flexibility.

The P30 Pro isn't available in the U.S., but the company has already covered the streets of major European cities with P30 Pro ads. The P30 Pro costs €999 ($1,130) with 128GB of storage, and there are more expensive options with more storage.

Huawei also unveiled a smaller device — the P30. It’s always interesting to look at the compromises of the more affordable model.

On that front, there’s a lot to like about the P30. For €799 ($900) with 128GB, you get a solid phone. It has a 6.1-inch OLED display and shares a lot of specifications with its bigger version.

The P30 features the same system-on-a-chip, the same teardrop notch, the same in-display fingerprint sensor and the same screen resolution. Surprisingly, the P30 Pro doesn't have a headphone jack, while the P30 has one.

There are some things you won’t find on the P30, such as wireless charging or the curved display. While the edges of the device are slightly curved, the display itself is completely flat. And I think it looks better.

Cameras are slightly worse on the P30, and you won’t be able to zoom in as aggressively. Here’s the full rundown:

In the end, it really depends on what you're looking for. The P30 Pro definitely has the best cameras of the P series. But the P30 is also an attractive phone for those looking for a smaller device.

Huawei has once again pushed the limits of what you can pack in a smartphone when it comes to cameras. While iOS and Android are more mature than ever, it’s fascinating to see that hardware improvements are not slowing down.


Wing Aviation, the drone-based delivery startup born out of Google’s X labs, has received the first FAA certification in the country for commercial carriage of goods. It might not be long before you’re getting your burritos sent par avion.

The company has been performing tests for years, making thousands of flights and supervised deliveries to show that its drones are safe and effective. Many of those flights were in Australia, where the company recently began its first commercial operations in suburban Canberra. Finland and other countries are also in the works.

Wing's first operations, starting later this year, will be in Blacksburg and Christiansburg, VA; obviously an operation like this requires close coordination with municipal authorities as well as federal ones. You can't just get a permission slip from the FAA and start flying over everyone's houses.

“Wing plans to reach out to the local community before it begins food delivery, to gather feedback to inform its future operations,” the FAA writes in a press release. Here’s hoping that means you can choose whether or not these loud little aircraft will be able to pass through your airspace.

Although the obvious application is getting a meal delivered quickly even when traffic is bad, there are plenty of others. One imagines medications arriving ahead of EMTs, or blood being transferred quickly between medical centers.

I’ve asked Wing for more details on its plans to roll this out elsewhere in the U.S., and will update this story if I hear back.
