Apple always drops a few whoppers at its events, and the iPhone XS announcement today was no exception. And nowhere were they more blatant than in the introduction of the devices’ “new” camera features. No one doubts that iPhones take great pictures, so why bother lying about it? My guess is they can’t help themselves.

Now, to fill this article out I had to get a bit pedantic, but honestly, some of these are pretty egregious.

“The world’s most popular camera”

There are a lot of iPhones out there, to be sure. But treating the iPhone as one continuous, decade-long camera, which Apple seems to be doing, is a disingenuous way to count. By that standard, Samsung would almost certainly be ahead, since it would be allowed to count all its Galaxy phones going back a decade as well, and they’ve definitely outsold Apple in that time. Going further, if you were to count a basic off-the-shelf camera stack with a common Sony or Samsung sensor as a “camera,” the iPhone would probably be outnumbered 10:1 by Android phones.

Is the iPhone one of the world’s most popular cameras? To be sure. Is it the world’s most popular camera? You’d have to slice it pretty thin and say that this or that model in this or that year was more numerous than any other single model. The point is that this is a very squishy metric, and one that many companies could lay claim to depending on how they pick or interpret the numbers. As usual, Apple didn’t show its work here, so we may as well coin a term and call this an educated bluff.

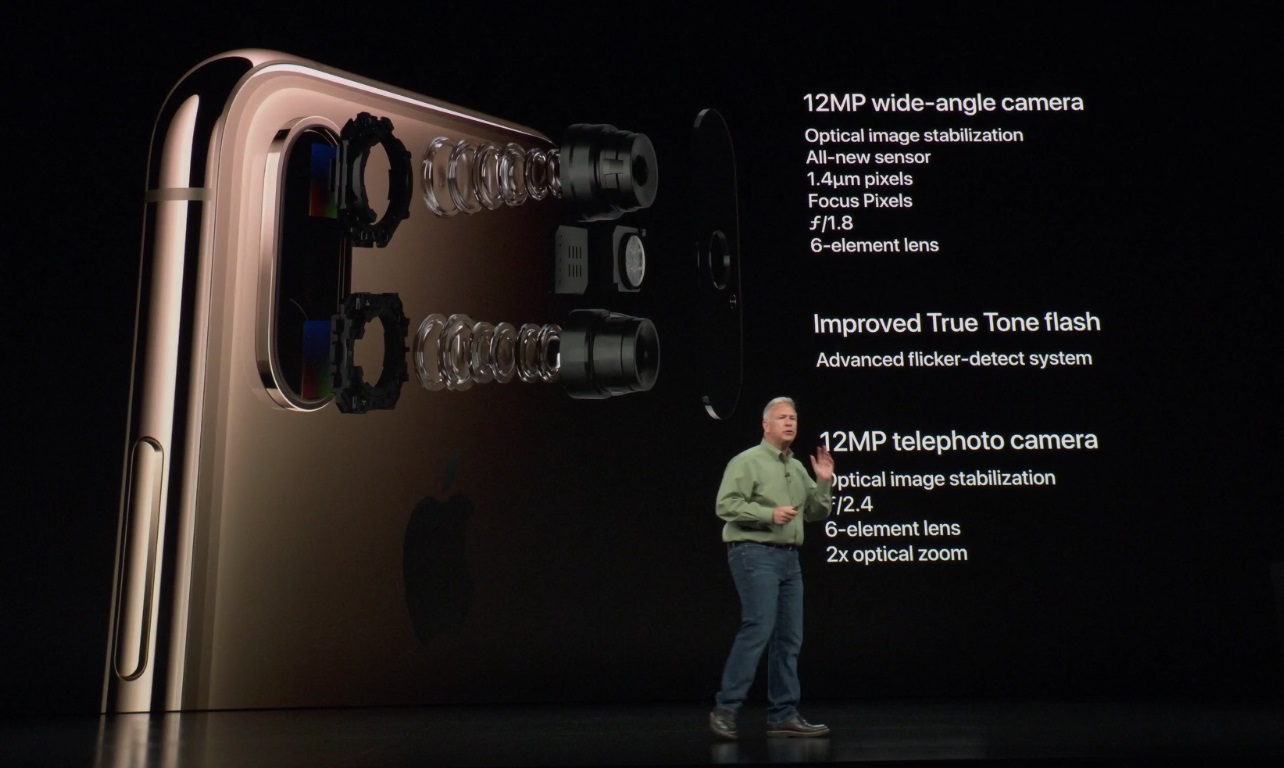

“Remarkable new dual camera system”

As Phil would explain later, a lot of the newness comes from improvements to the sensor and image processor. But since he called the system new while standing in front of an exploded view of the camera hardware, we can take him to be referring to the hardware as well.

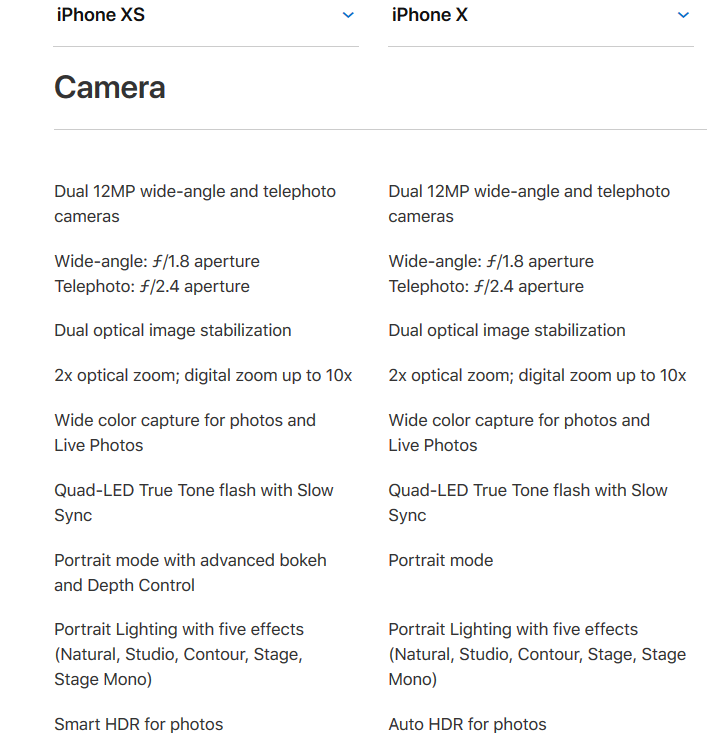

It’s not actually clear what in the hardware is different from the iPhone X. Certainly if you look at the specs, they’re nearly identical:

If I said these were different cameras, would you believe me? Same F numbers, no reason to think the image stabilization is different or better, and so on. It would not be unreasonable to guess that these are, as far as optics, the same cameras as before. Again, not that there was anything wrong with them — they’re fabulous optics. But showing components that are in fact the same and saying it’s different is misleading.

Given Apple’s style, if there were any actual changes to the lenses or OIS, they’d have said something. It’s not trivial to improve those things and they’d take credit if they had done so.

The sensor, of course, is extremely important, and it is improved: the 1.4-micrometer pixel pitch on the wide-angle main camera is larger than the 1.22-micrometer pitch on the X. Since the megapixel count is about the same, we can probably surmise that the “larger” sensor is simply a consequence of the wider pixel pitch, not any kind of real form factor change. The wider pitch, which helps with sensitivity, is what’s actually improved; the increased dimensions just follow from it.
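To put rough numbers on that, here is a back-of-the-envelope sketch. It assumes both sensors use a 4032 × 3024 pixel grid (the usual 12-megapixel 4:3 layout), which is my assumption rather than anything Apple put on a slide, and it ignores any border pixels outside the active area. The function names are made up for illustration.

```python
# Back-of-the-envelope: active sensor area implied by pixel pitch alone.
# ASSUMPTION: both phones use a 4032 x 3024 (12 MP, 4:3) pixel grid.
WIDTH_PX, HEIGHT_PX = 4032, 3024


def sensor_dimensions_mm(pitch_um):
    """Return (width_mm, height_mm) of the active area for a given pixel pitch."""
    return WIDTH_PX * pitch_um / 1000, HEIGHT_PX * pitch_um / 1000


def sensor_area_mm2(pitch_um):
    """Active area in square millimeters for a given pixel pitch in micrometers."""
    width_mm, height_mm = sensor_dimensions_mm(pitch_um)
    return width_mm * height_mm


x_area = sensor_area_mm2(1.22)   # iPhone X main camera pitch, per the article
xs_area = sensor_area_mm2(1.4)   # iPhone XS main camera pitch, per the article
area_ratio = xs_area / x_area

print(f"iPhone X : {x_area:.1f} mm^2")
print(f"iPhone XS: {xs_area:.1f} mm^2")
print(f"ratio    : {area_ratio:.2f}x")
```

Under those assumptions the area grows by the square of the pitch ratio, which works out to roughly a third more area with no change in pixel count. That is all the “larger sensor” needs to mean.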

We’ll look at the image processor claims below.

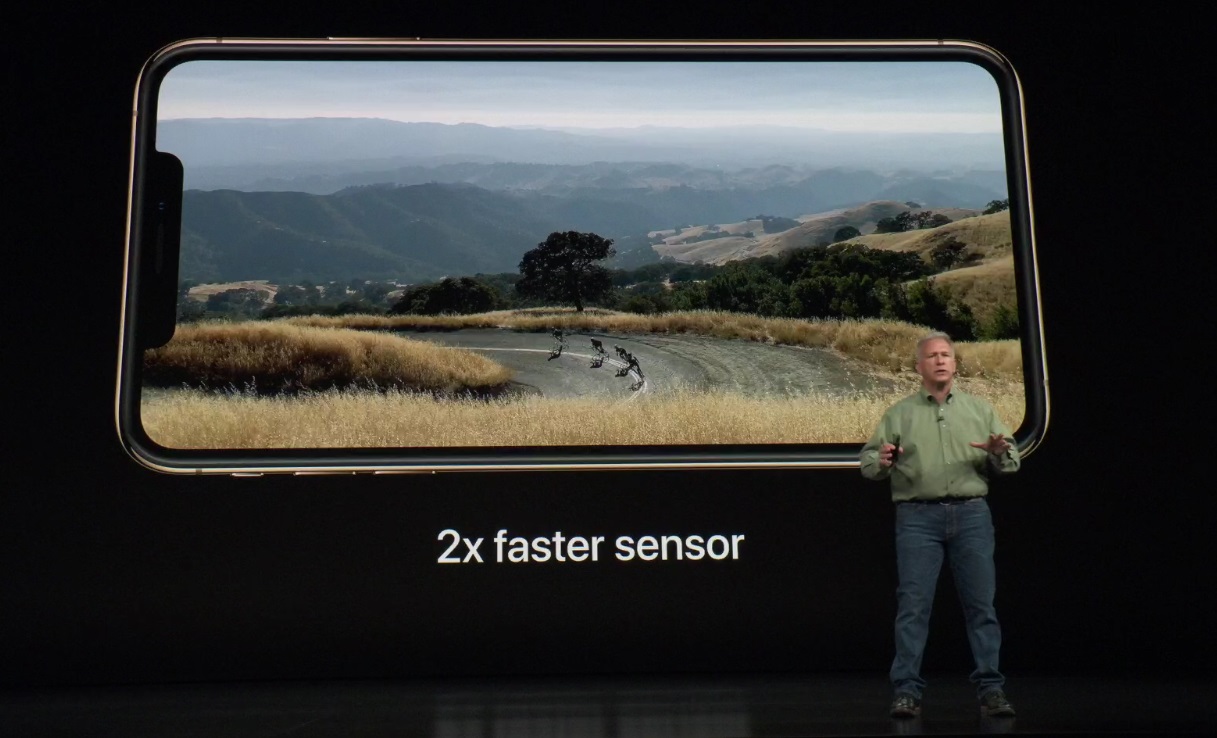

“2x faster sensor… for better image quality”

It’s not really clear what is meant when he says this. “To take advantage of all this technology.” Is it the readout rate? Is it the processor that’s faster, since that’s what would probably produce better image quality (more horsepower to calculate colors, encode better, and so on)? “Fast” also refers to light-gathering — is that faster?

I don’t think it’s accidental that this was just sort of thrown out there and not specified. Apple likes big simple numbers and doesn’t want to play the spec game the same way as the others. But this, in my opinion, crosses the line from simplifying to misleading. At least this is something Apple, or some detailed third-party testing, can clear up.

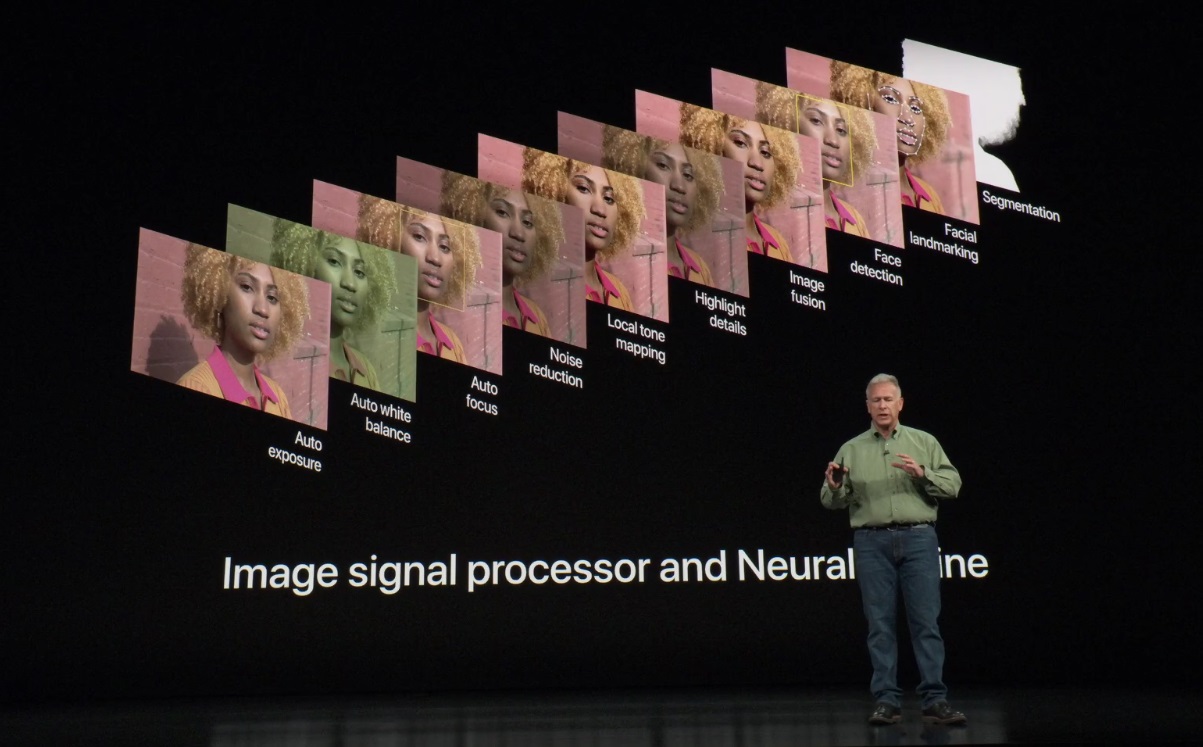

“What it does that is entirely new is connect together the ISP with that neural engine, to use them together.”

Now, this was a bit of sleight of hand on Phil’s part. Presumably what’s new is that Apple has better integrated the image processing pathway between the traditional image processor, which is doing the workhorse stuff like autofocus and color, and the “neural engine,” which is doing face detection.

It may be new for Apple, but this kind of thing has been standard in many cameras for years. Both phones and interchangeable-lens systems like DSLRs use face and eye detection, some using neural-type models, to guide autofocus or exposure. This (and the problems that come with it) goes back years and years. I remember point-and-shoots that had it, but unfortunately failed to detect people who had dark skin or were frowning.

It’s gotten a lot better (Apple’s depth-detecting units probably help a lot), but the idea of tying a face-tracking system, whatever fancy name you give it, into the image-capture process is old hat. It’s probably not “entirely new” even for Apple, let alone the rest of photography.

“We have a brand new feature we call smart HDR.”

Apple’s brand new feature has been on Google’s Pixel phones for a while now. A lot of cameras now keep a frame buffer going, essentially snapping pictures in the background while the app is open, then using the latest one when you hit the button. And Google, among others, had the idea that you could use these unseen pictures as raw material for an HDR shot.

Probably Apple’s method is a little different, but fundamentally it’s the same thing. Again, “brand new” to iPhone users, but well known among Android flagship devices.
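For the curious, the background frame buffer idea can be sketched in a few lines. This is a toy illustration, not Apple’s or Google’s actual pipeline: the `FrameBuffer` class is hypothetical, frames are plain lists of brightness values, and a simple per-pixel average stands in for the real alignment and merge step.

```python
from collections import deque


class FrameBuffer:
    """Keeps the most recent frames captured while the camera app is open."""

    def __init__(self, capacity=4):
        # A deque with maxlen drops the oldest frame automatically when full.
        self.frames = deque(maxlen=capacity)

    def push(self, frame):
        """Store a newly captured frame (a list of pixel brightness values)."""
        self.frames.append(frame)

    def merge(self):
        """Average buffered frames pixel by pixel (a stand-in for a real HDR merge)."""
        if not self.frames:
            raise ValueError("no frames buffered yet")
        count = len(self.frames)
        return [sum(pixels) / count for pixels in zip(*self.frames)]


# The app keeps capturing in the background; only the last 3 frames survive.
buf = FrameBuffer(capacity=3)
for frame in ([0.10, 0.80], [0.20, 0.90], [0.30, 0.70], [0.40, 0.80]):
    buf.push(frame)

# Pressing the shutter merges whatever is already in the buffer.
merged = buf.merge()
print(merged)
```

The point of the design is that by the time you hit the button, the raw material for the shot already exists, so there is no extra wait to gather it.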

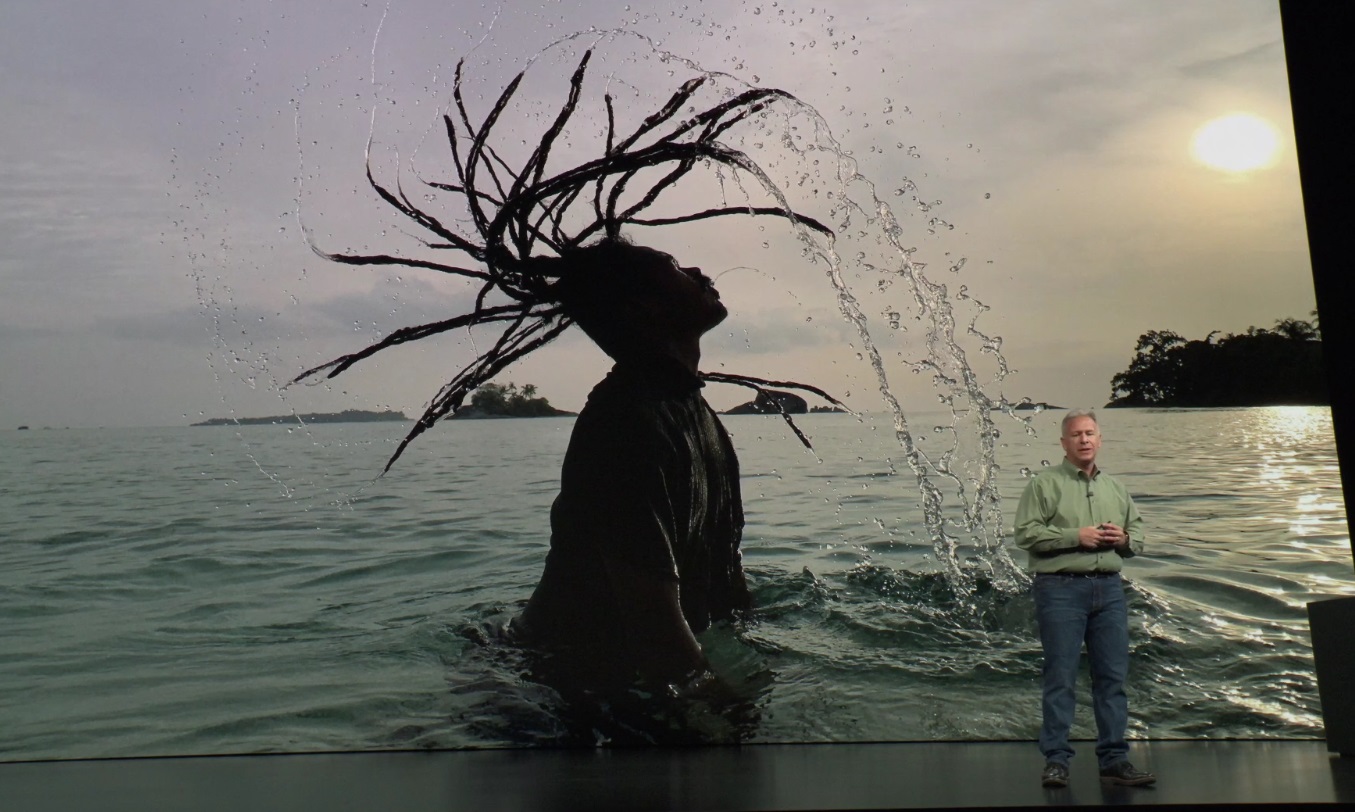

“This is what you’re not supposed to do, right, shooting a photo into the sun, because you’re gonna blow out the exposure.”

I’m not saying you should shoot directly into the sun, but it’s really not uncommon to include the sun in your shot. In the corner like that it can make for some cool lens flares, for instance. It won’t blow out these days because almost every camera’s auto-exposure algorithms are either center-weighted or intelligently shift around — to find faces, for instance.

When the sun is in your shot, your problem isn’t blown out highlights but a lack of dynamic range caused by a large difference between the exposure needed to capture the sun-lit background and the shadowed foreground. This is, of course, as Phil says, one of the best applications of HDR — a well-bracketed exposure can make sure you have shadow details while also keeping the bright ones.
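Here is a minimal sketch of why bracketing helps, assuming pixel values normalized to the range 0 to 1 and a simple “how close to mid-gray is this pixel” weighting. It is a generic exposure-fusion toy, not whatever Apple’s smart HDR actually does; the function names, the 0.5 target and the 0.2 spread are illustrative choices of mine.

```python
import math


def well_exposedness(value, target=0.5, sigma=0.2):
    """Weight a pixel by how close it is to mid-gray (1.0 means ideally exposed)."""
    return math.exp(-((value - target) ** 2) / (2 * sigma ** 2))


def fuse_exposures(exposures):
    """Blend bracketed exposures per pixel, favoring well-exposed values."""
    fused = []
    for pixel_values in zip(*exposures):
        weights = [well_exposedness(v) for v in pixel_values]
        total = sum(weights)  # always positive, since exp() never returns 0 here
        fused.append(sum(w * v for w, v in zip(weights, pixel_values)) / total)
    return fused


# Pixel 0 is the shadowed foreground, pixel 1 is the sunlit background.
short_exposure = [0.05, 0.45]  # keeps the background, crushes the shadows
long_exposure = [0.50, 1.00]   # lifts the shadows, clips the background
fused = fuse_exposures([short_exposure, long_exposure])
print(fused)
```

Each fused pixel leans toward whichever exposure captured it best: the foreground comes mostly from the long exposure and the background mostly from the short one.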

Funnily enough, in the picture he chose here, the shadow details are mostly lost — you just see a bunch of noise there. You don’t need HDR to get those water droplets — that’s a shutter speed thing, really. It’s still a great shot, by the way, I just don’t think it’s illustrative of what Phil is talking about.

“You can adjust the depth of field… this has not been possible in photography of any type of camera.”

This just isn’t true. You can do this on the Galaxy S9, and it’s being rolled out in Google Photos as well. Lytro was doing something like it years and years ago, if we’re including “any type of camera.” I feel kind of bad that no one told Phil. He’s out here without the facts.

Well, that’s all the big ones. There were plenty more, shall we say, embellishments at the event, but that’s par for the course at any big company’s launch. I just felt like these ones couldn’t go unanswered. I have nothing against the iPhone camera — I use one myself. But boy are they going wild with these claims. Somebody’s got to say it, since clearly no one inside Apple is.

The 7 most egregious fibs Apple told about the iPhone XS camera today was first posted on https://techcrunch.com/gadgets/
